Musing 103: UI-TARS: Pioneering Automated GUI Interaction with Native Agents

Fascinating work out of ByteDance Seed and Tsinghua

Today’s paper: UI-TARS: Pioneering Automated GUI Interaction with Native Agents. Qin et al. 22 Jan. 2025. https://arxiv.org/html/2501.12326v1

One of the most exciting developments post-LLMs is rapid progress in autonomous agents. These agents, as their name suggests, are supposed to operate with minimal human oversight, perceiving their environment, making decisions, and executing actions to achieve specific goals.

Humans are agents, and one of the things we like to do is interact with graphical user interfaces (GUIs). Developing AI-based agents that can interact seamlessly with GUIs, which require the agent to perform tasks that rely heavily on graphical elements such as buttons, text boxes, and images, has emerged as a frontier problem. Today’s native GUI agent models often falls short in practical applications, causing their real-world impact to lag behind its hype.

These limitations stem from two primary sources:

(1) The GUI domain itself presents unique challenges that compound the difficulty of developing robust agents. On the perception side, agents must not only recognize but also effectively interpret the high information-density of evolving user interfaces. Reasoning and planning mechanisms are equally important in order to navigate, manipulate, and respond to these interfaces effectively. These mechanisms must also leverage memory, considering past interactions and experiences to make informed decisions. Beyond high-level decision-making, agents must also execute precise, low-level actions, such as outputting exact screen coordinates for clicks or drags and inputting text into the appropriate fields.

(2) The transition from agent frameworks to agent models introduces a fundamental data bottleneck. Modular frameworks traditionally rely on separate datasets tailored to individual components. These datasets are relatively easy to curate since they address isolated functionalities. However, training an end-to-end agent model demands data that integrates all components in a unified workflow, capturing the seamless interplay between perception, reasoning, memory, and action.

Today’s paper introduces a native GUI agent model called UI-TARS. Based on the challenges above, core capabilities of a ‘native’ agent model include: (1) perception, enabling real-time environmental understanding for improved situational awareness; (2) action, requiring the native agent model to accurately predict and ground actions within a predefined space; (3) reasoning, which emulates human thought processes and encompasses both System 1 and System 2 thinking; and (4) memory, which stores task-specific information, prior experiences, and background knowledge.

A demo case study of UI-TARS is illustrated in the figure below where a user is trying to find flights:

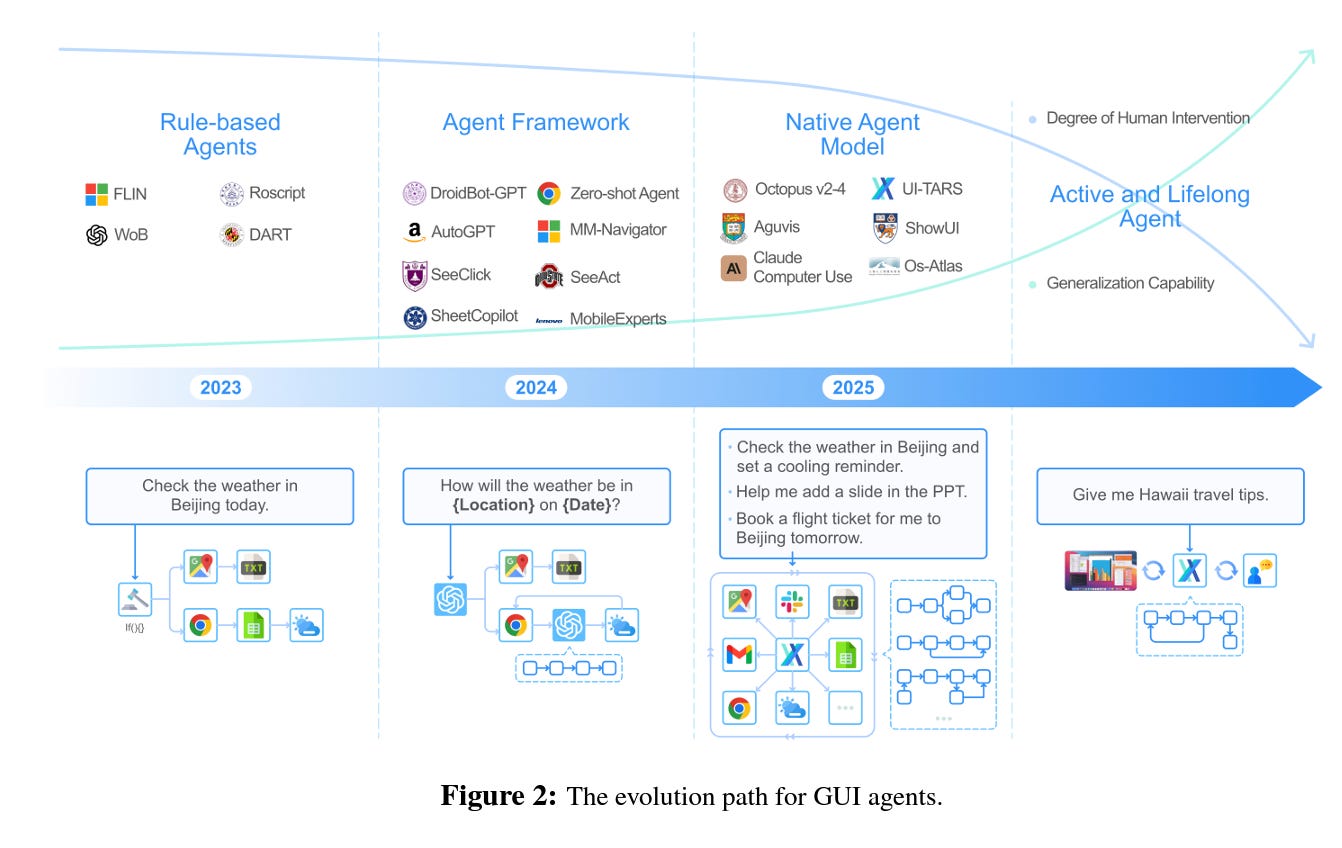

GUI agents are particularly significant in the context of automating workflows, where they help streamline repetitive tasks, reduce human effort, and enhance productivity. At their core, GUI agents are designed to facilitate the interaction between humans and machines, simplifying the execution of tasks. Over the years, agents have progressed from basic rule-based automation to an advanced, highly automated, and flexible system that increasingly mirrors human-like behavior and requires minimal human intervention to perform its tasks. As illustrated in Figure 2, the development of GUI agents can be broken down into several key stages, each representing a leap in autonomy, flexibility, and generalization ability.

Agent frameworks using the power of large models (M)LLMs have surged in popularity recently. This surge is driven by the foundation models’ ability to handle diverse data types and generate relevant outputs via multi-step reasoning. Unlike rule-based agents, which require handcrafted rules for each specific task, foundation models can generalize across different environments and effectively handle tasks by interacting multiple times with environments.

Traditionally, agent models relied on pre-programmed workflows, driven by human expertise, which makes such frameworks inherently non-scalable. They depend on the foresight of developers to anticipate all future variations, limiting their capacity to handle unforeseen changes or learn autonomously. They are design-driven, meaning they lack the ability to learn and generalize across tasks without continuous human involvement. This is why the authors focus so much on native models, which are data-driven rather than design-driven.

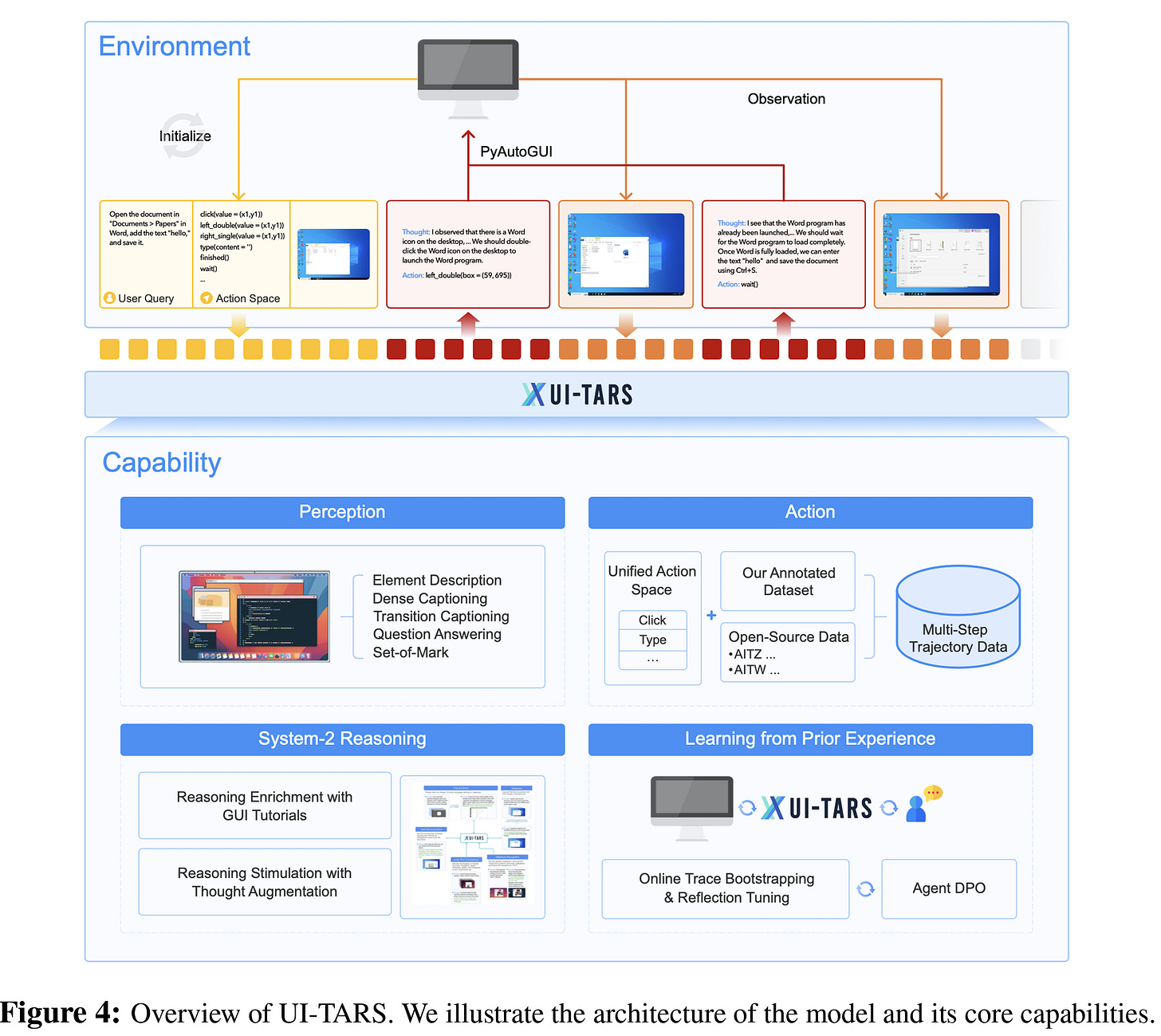

Let’s get into some architectural details of UI-TARS. The figure below provides an overview of the model and its core capabilities:

As illustrated, given an initial task instruction, UI-TARS iteratively receives observations from the device and performs corresponding actions to accomplish the task. At each time step, it takes as input the task instruction, the history of prior interactions, and the current observation. Based on this input, the model outputs an action from the predefined action space. After executing the action, the device provides the subsequent observation, and these processes iteratively continue. To further enhance the agent’s reasoning capabilities and foster more deliberate decision-making, the authors also integrate a reasoning component in the form of “thoughts”, generated before each action. These thoughts reflect the reflective nature of “System 2” thinking. They act as a crucial intermediary step, guiding the agent to reconsider previous actions and observations before moving forward, thus ensuring that each decision is made with intentionality and careful consideration.

This approach is inspired by the ReAct framework, which introduces a similar reflective mechanism but in a more straightforward manner. In contrast, the integration of “thoughts” in UI-TARS involves a more structured, goal-oriented deliberation. These thoughts are a more explicit reasoning process that guides the agent toward better decision-making, especially in complex or ambiguous situations.

To address the challenge of data scarcity and ensure diverse coverage, a large-scale dataset was constructed, consisting of screenshots and metadata from websites, apps, and operating systems. By leveraging specialized parsing tools, rich metadata—such as element type, depth, bounding box, and text content—was automatically extracted during the rendering of the screenshots. The approach combined automated crawling with human-assisted exploration to capture a wide array of content. This included primary interfaces as well as deeper, nested pages accessed through repeated interactions. All collected data was systematically logged in a structured format comprising screenshots, element boxes, and associated metadata to ensure comprehensive coverage of diverse interface designs.

The data construction process followed a bottom-up approach, beginning with individual elements and progressing toward a holistic understanding of interfaces. By focusing initially on small, localized components of the graphical user interface (GUI) and gradually integrating them into the broader context, this method minimized errors while maintaining a balance between precision in recognizing components and the ability to interpret complex layouts. Using the collected screenshot data, five core task datasets were curated, as illustrated in Figure 5 below.

There are many more details and formalism in the paper, but suffice it to say that perception is a very important part of the system. Next comes unified action modeling and grounding. The de-facto approach for improving action capabilities involves training the model to mimic human behaviors in task execution, i.e., behavior cloning. While individual actions are discrete and isolated, real-world agent tasks inherently involve executing a sequence of actions, making it essential to train the model on multi-step trajectories. This approach allows the model to learn not only how to perform individual actions but also how to sequence them effectively (system-1 thinking).

Here comes an important design choice. Similar to previous works, the authors design a common action space that standardizes semantically equivalent actions across devices (Table 1), such as “click” on Windows versus “tap” on mobile, enabling knowledge transfer across platforms. Due to device-specific differences, they also introduce optional actions tailored to each platform. This means that the model can handle the unique requirements of each device while maintaining consistency across scenarios. They also define two terminal actions: Finished(), indicating task completion, and CallUser(), invoked in cases requiring user intervention, such as login or authentication.

Other details that are described in the paper include mechanisms for improving grounding ability (i.e., the ability to accurately locate and interact with specific GUI elements) and infusing System 2 reasoning with GUI tutorials and thought augmentation. These are fascinating additions to making the system more robust and capable. I encourage interested readers to peruse it more closely in Section 4.4 of the paper (page 17). Some of the reasoning patterns that are used in thought augmentation are covered by the figure below:

Let’s move on to experiments. The authors compare UI-TARS with various baselines, including commercial models such as GPT-4o, Claude-3.5-Sonnet, Gemini-1.5-Pro, and Gemini-2.0, as well as academic models like CogAgent, OminiParser, InternVL, Aria-UI, Aguvis, OS-Atlas, UGround, ShowUI, SeeClick, and the Qwen series models QwenVL-7B, Qwen2-VL (7B and 72B), UIX-Qwen2-7B, and Qwen-VL-Max. First, let’s look at UI-TARS’ performance on GUI perception benchmarks:

UI-TARS models achieve outstanding results, with the 72B variant scoring 82.8, significantly outperforming closed-source models like GPT-4o (78.5) and Claude 3.5 (78.2),as shown in Table 3 above. It also has advantages on the mobile screen understanding task (ScreenQA-short). Concerning mobile tasks, the performance is also impressive. The authors use AndroidControl and GUIOdyssey: AndroidControl evaluates planning and action-execution abilities in mobile environments. This dataset includes two types of tasks: (1) high-level tasks, which require the model to autonomously plan and execute multistep actions, and (2) low-level tasks, which instruct the model to execute predefined, human-labeled actions for each step. GUIOdyssey focuses on cross-app navigation tasks in mobile environments, featuring an average of more than 15 steps per task. The tasks span diverse navigation scenarios with instructions generated from predefined templates.

We find from Table 8 above that UI-TARS-72B achieves SOTA performance across key metrics. Even smaller parameter models like UI-TARS-7B achieves performance superior to strong baselines like Claude. Ultimately, these results show that it exhibits good performance in both website and mobile domain, which truly makes it more general purpose.

I can point to several other results, but I’ll close with this figure that is right on Page 1 itself. Relative improvement of UI-TARS is high, and it is clearly much better than generic use of GPT-4o and Claude. This shows that building a GUI agent is more challenging than meets the eye, but is already possible using current technology. And we don’t need to train an agent for every single environment: the authors’ design choices suggest a level of generality that works across mobile, Web and cross-app domains without requiring upfront labor for each new domain.

In closing this musing, what I really like about this work is that it pushes us closer toward what I like to refer to as the Star Trek age. I don’t think automating GUI actions will take anyone’s job away. We all have to deal with GUIs. But imagine if you didn’t have to click and navigate so much on your screen, which is cognitively taxing, even if it doesn’t seem like we’re doing much. Click this button, close this window, minimize this screen, then click again…you get the gist. Anything that taxes us less cognitively and makes us more productive (and quicker), is only a boon. That’s the promise of good technology. I don’t believe this is the final product yet, only the beginning. Like any system deployed in the wild, problems are likely to arise that perhaps we don’t see reported on in the paper (or they are just unknown). But the robustness of the results that were reported are already very promising.