Musing 111: An LLM-Based Approach for Insight Generation in Data Analysis

Interesting paper out of Aily Labs and EURECOM accepted for publication at NAACL, 2025

Today’s paper: An LLM-Based Approach for Insight Generation in Data Analysis. Perez et al. https://arxiv.org/pdf/2503.11664

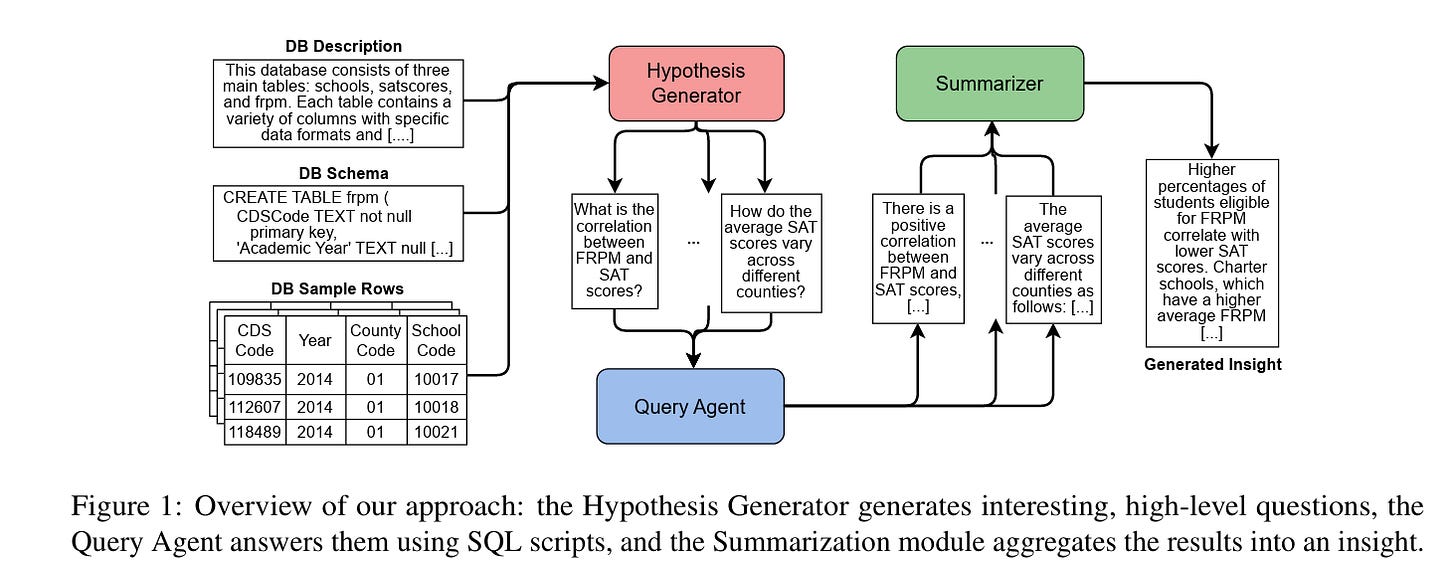

Insights from data are essential to make informed decisions. This is crucial in business contexts, healthcare, or scientific research, where better insights lead to more effective outcomes and advancements. For example, given the database in Figure 1 below , a valuable insight would be “Higher percentages of students eligible for free or reduced-price meals (FRPM) correlate with lower average SAT scores in reading, math, and writing.”

Generating insights traditionally requires significant manual effort. Analysts must meticulously preprocess, explore, and refine the data. It also requires specialized knowledge of the domain to analyze and interpret the data, further complicating the task. This makes the process both time-consuming and resource-intensive.

Today’s paper introduces a novel framework leveraging Large Language Models (LLMs) to automatically generate insights. However, the process of extracting valuable insights from raw data with LLMs is non-trivial:

First, LLMs have limited context size and cannot be fed with entire databases.

Second, LLMs still struggle in processing structured data.

Because of these issues, the authors adopt an agentic approach, where external tools (SQL scripts, in their case) are used by the LLM to handle structured data. While this is a popular solution, existing methods mostly produce “shallow” insights, such as the identification of simple trends or outliers. The authors define an insight I as a short text derived from a database D. They set that it should not be longer than 3 sentences based on experiments with their product, which indicate that longer text is less effective in maintaining reader interest.

The authors generate insights with a 3-step architecture, as in Figure 2 below , that (1) generates high-level questions and splits each of them into simpler subquestions that are easier to answer, (2) then answers and validates them using SQL to (3) finally post-process them into the final insight.

The Hypothesis Generator uses a multi-step approach to generate questions that are both interesting and easy to answer for the given database. It is composed of two elements. A high-level generator HL-G uses a prompt to generate overarching questions h_i. The high-level generator uses a short description of the database (short(D_info)) that they shorten to prevent constraining the model’s response with database details, enabling more exploratory questions within the domain. A low-level generator LL-G splits the high-level question h_i into subquestions s_ij. By using specific prompting and the full description and schema of the database as input, they constrain the model to formulate more precise and concrete questions, which are easier to answer in the next step.

Moving on to the query agent, the authors’ work employs SQL queries instead of pandas to overcome the speed, storage, and scalability limitations of data-frames. The Query Agent Q_Agent generates a SQL query q_ij and resulting tables R_ij (q_ij(D) = R_ij) to answer the subquestions using the database information and schema. Queries are then verbalized verb(R_ij) in natural language with a prompt to answer s_ij. They are then validated using LLM evaluation functions that use an LLM score of answer relevance and answerability to remove questions with a score under a threshold.

Finally, the Summarization step summarizes the verbalized answers to generate the insight. Each generated insight undergoes post-processing to filter out potential hallucinations, following the procedure outlined in Algorithm 1 below. An evaluation function is used to measure the hallucination score of the language model by segmenting the insight into distinct claims and generating a score based on contradictions between them and the corresponding answers. The summary is refined iteratively using a self-correction mechanism until the hallucination score falls below a predefined threshold or the maximum number of iterations is reached.

Let’s move on to experiments. The study evaluates the proposed framework based on two primary metrics:

Insightfulness – A subjective metric that assesses the relevance, novelty, and actionability of generated insights. Insightfulness is measured using an Elo ranking system derived from pairwise human and LLM-based comparisons.

Correctness – Defined as the truth value of individual claims within an insight. Correctness is assessed by calculating the proportion of factually accurate claims within an insight.

The authors compare their approach against several baselines:

GPT-DA – ChatGPT's data analysis functionality, which generates insights from CSV files using pandas.

Quick Insights (Microsoft PowerBI) – An automated insight-generation tool that relies on pre-joined tables or individual table sampling.

Serial – A method where a portion of the database is serialized into an HTML table and fed into an LLM prompt due to context window constraints.

Additionally, the study includes ablation variants of the proposed model:

HLI (High-Level Insights) – The full version using both high-level and low-level questions.

HLI-WS (Without Summarization of Database Description) – Uses the complete database description instead of a short summary.

HLI-WH (Without High-Level Questions) – Generates insights using only low-level questions.

The evaluation is conducted on a mix of proprietary and public datasets, and uses GPT-4o as the base model for all methods to ensure a fair comparison, with insights being generated via SQL-based queries rather than pandas operations to enhance scalability.

Considering the results above in Figure 3 (on the private dataset), we find that the HLI model achieves the highest insightfulness score, outperforming all other baselines. The HLI-WH (Without High-Level Questions) variant performs worse than HLI, indicating that high-level question generation significantly contributes to insightfulness. Baselines like GPT-DA and Quick Insights perform the worst, showing that traditional automated insight-generation tools struggle to generate impactful insights.

Similarly, considering the LLM-based Elo ranking for the private dataset, the LLM-based eval confirms human rankings, with HLI achieving the highest insightfulness score, and GPT-DA and Quick Insights remaining at the bottom. Overall, both results demonstrate that the proposed HLI model consistently outperforms existing methods, and the inclusion of high-level questioning and summarization techniques significantly boosts insight quality.

Next, considering the correctness metric, Figure illustrates that Quick gets perfect correctness across all databases due to its design. HLI-WS and HLI show slightly lower results, but still consistently correct overall; HLI-WH has a slightly lower correctness. Finally, Serial and GPT-DA get the lowest scores, with high variability. Overall, method HLI-WH gives the best tradeoff between correctness and insightfulness over most databases. This can be seen in Figure 7 below, which represents the performance of each model on a database as a point in a plot, where the x-axis corresponds to the insightfulness and the y-axis to correctness.

In closing this musing, while the work is not overly complicated or novel, it is very pragmatic. The authors present a novel method for generating insights from multi-table databases. The proposed architecture formulates high-level questions, decomposes them into simpler subquestions, retrieves answers using automated SQL queries, and synthesizes the results into a final insight. The approach was evaluated for both insightfulness and correctness through human and LLM-based assessments, comparing its performance against three baselines and two architectural variations. The findings demonstrate that this method consistently outperforms existing approaches, achieving superior and more reliable results across both evaluation metrics.

The work is not without its limitations, however. Generated insights are not always perfectly correct, which in some contexts may lead to unexpected decisions. This effect can be alleviated by reducing database complexity or with future models that have better results on the Text-to-SQL task. Additionally, the architecture of the model involves a lot of LLM calls, which can have downsides in terms of cost. So this is a promising step, but there’s plenty of scope for follow-on research and some nice industrial applications.