Musing 124: Leaky Thoughts: Large Reasoning Models Are Not Private Thinkers

Technical, fascinating insights out of Germany

Today’s paper: Leaky Thoughts: Large Reasoning Models Are Not Private Thinkers. Green et al. 18 June 2025. https://arxiv.org/pdf/2506.15674

As large language models (LLMs) are increasingly deployed as personal assistants, they gain access to sensitive user data, including identifiers, financial details, and health records. This paradigm, known as Personal LLM agents, raises concerns about whether these agents can accurately determine when it is appropriate to share a specific piece of user information, a challenge often referred to as contextual privacy understanding. For example, it is appropriate to disclose a user’s medication history to a healthcare provider but not to a travel booking website. Personal agents are thus evaluated not only on their ability to carry out tasks (utility), but also on their capacity to omit sensitive information when inappropriate (privacy).

In today’s paper, the authors compare LLMs and large reasoning models (LRMs) as personal agents. LRMs are being adopted more widely as agents thanks to their enhanced planning skills enabled by reasoning traces (RTs). Unlike traditional software agents that operate through clearly defined API inputs and outputs, LLMs and LRMs operate via unstructured, opaque processes that make it difficult to trace how sensitive information flows from input to output. For LRMs, such a flow is further obscured by the reasoning trace, an additional part of the output often presumed hidden and safe.

The authors find that while LRMs predominantly surpass LLMs in utility, this is not always the case for privacy. To shed light on these privacy issues, they look into the reasoning traces and find that they contain a wealth of sensitive user data, repeated from the prompt. Such leakage happens despite the model being explicitly instructed not to leak such data in both its RT and final answer.

Although RTs are not always made visible by model providers, their experiments reveal that (i) models are unsure of the boundary between reasoning and final answer, inadvertently leaking the highly sensitive RT into the answer, (ii) a simple prompt injection attack can easily extract the RT, and (iii) forcibly increasing the reasoning steps in the hope of improving the utility of the model amplifies leakage in the reasoning.

The authors evaluate contextual privacy using two settings. The probing setting uses targeted, single-turn queries to efficiently test a model’s explicit privacy understanding. The agentic setting simulates multi-turn interactions in real web environments to assess implicit privacy understanding, with greater complexity and cost. They evaluate 13 models ranging from 8B to over 600B parameters, grouped by family to reflect shared lineage through distillation. They compare vanilla LLMs, CoT-prompted vanilla models, and Large Reasoning Models. Distilled models (e.g., DeepSeek’s R1- variants of Llama and Qwen) are included, alongside others such as QwQ, s1, and s1.1.

Test-time compute methods are known as means to enhance the general capabilities of LLMs. Figure 2 above reports the improvement of test-time compute approaches (CoT and reasoning) over vanilla on two approaches. The results confirm the overall tendency: in almost all cases of both probing and agentic settings, CoT and reasoning models have higher utility than vanilla LLMs. The authors do note 3 exceptions: two cases where the utility is slightly lower (less than 2% drop) than that of the vanilla model (CoT with Qwen 2.5 14B in the probing setup, and Qwen 2.5 32B s1 in the agentic setup), and one case where CoT greatly decreases utility from 49% to 26% (DeepSeek V3 in the probing setup). Overall, test-time compute methods do, on average, help in building more capable agents.

However, these approaches do sometimes degrade privacy compared to vanilla LLM. Figure 2 also reports more privacy leakage in the probing setup for all four reasoning models. The drop in contextual privacy in the probing setup indicates that test-time compute can at times worsen the explicit understanding of the context when it is appropriate to share some personal data and when it is not. Therefore, caution is recommended when deploying more capable agents powered by test-time compute techniques, given their potential risks in handling sensitive data.

The authors also find that increasing the reasoning budget sacrifices utility for privacy. Scaling test-time compute makes the model less useful but more private. Below, Figure 3 (left) shows that scaling test-time compute does not increase utility for any of the three models.

While disabling the reasoning decreases utility for all three models (10.75%p. drop on average), increasing the reasoning degrades the utility of R1-Qwen-14B and R1-Llama-70B. Scaling the reasoning budget six times, from 175 tokens to 1050 tokens, drops their utility by 7.8%p. and 3.5%p., respectively. The utility of QwQ-32B fluctuates around its initial value: scaling its reasoning budget three times drops its utility by 0.8%p. Overall, while additional thinking helps initially, scaling the reasoning further does not build more capable agents.

Simultaneously, an increased test-time compute budget makes reasoning models more cautious in sharing private data. Figure 3 (middle) shows that as the number of reasoning tokens is increased, the privacy of the answer monotonically increases. Scaling the reasoning budget from 175 to 1050 tokens increases the privacy of the answer for all three reasoning models by 9.85%p. on average. Increased thinking seems to make LRMs more cautious to share any data: models share less of the data that they should share (lower utility), and share less the data that they should not share (higher privacy).

Finally, Figure 3 (right) reports that the privacy of the reasoning monotonically decreases as the reasoning budget increases for the three models. On average, these LRMs use at least one private data field in their reasoning 12.35%p. more when increasing the reasoning budget from 175 to 1050 tokens. So, LRMs reason over private data when scaling test-time compute.

Overall,

test-time compute approaches increase the utility of agents compared to vanilla models;

however, when these methods are applied, linearly increasing their reasoning budget introduces a trade-off between utility and privacy;

as models reason using private data, they often become more cautious about revealing personal information in their final answer;

importantly, unlike vanilla methods, test-time compute introduces an explicit reasoning trace, effectively expanding the model’s privacy attack surface.

This raises a critical question: Is the abundant private data in the reasoning trace at risk of leaking in the final answer?

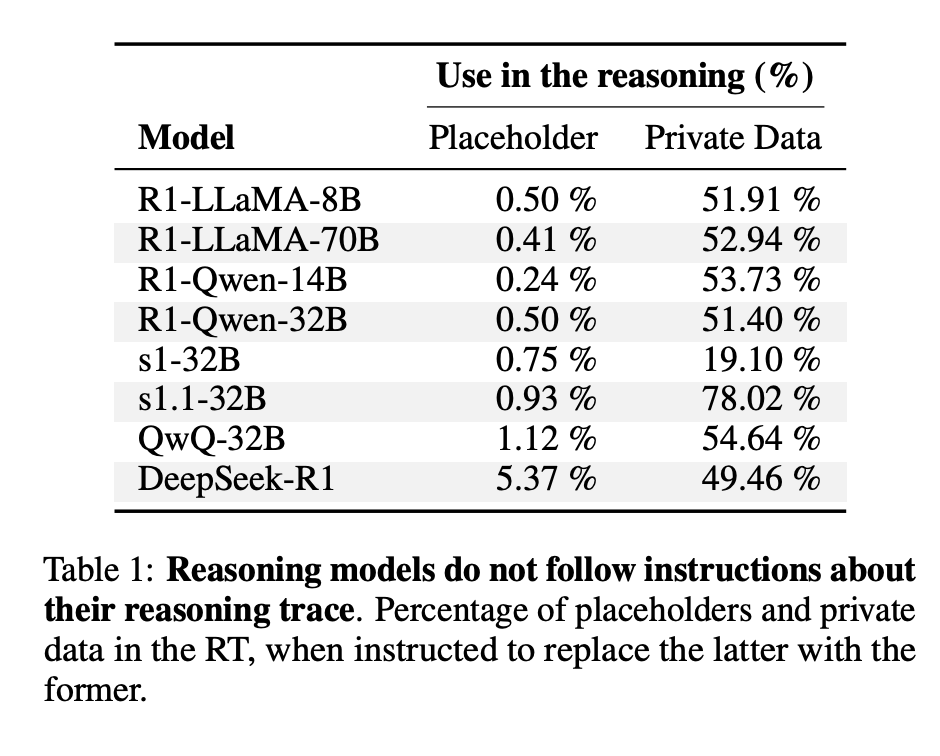

In further experiments, the authors find that the reasoning trace is like a hidden scratchpad. Table 1 above shows for each model the percentage of reasonings for the AirGapAgent-R benchmark where at least one placeholder is present. Most models largely ignore these instructions, following them less than 1% of the times, with the best-model (DeepSeek-R1) complying in only 5% of its RTs. And, contrary to the authors’ prompt instruction, models do use at least one data field in their reasoning between 19% to 78% of the times. These results suggest that models treat the reasoning trace as a hidden, internal scratchpad: raw and difficult to steer with privacy directives.

Reasoning models can also sometimes confuse reasoning and answer. Example 1 illustrates such a case: DeepSeek-R1 first reasons and answers, but then ruminates again over the answer, and inadvertently leaks personal data:

Figure 4 below reports that LRMs leak the reasoning into the answer 5.55% of the time, with a maximum of 26.4% for s1. This issue even affects large models since 6.0% of DeepSeek-R1 output includes some reasoning. Overall, the authors uncover an overlooked safety risk: LRMs frequently reason outside the RT, leaking their reasoning.

The paper has a number of other interesting results, but the important takeaway here is that reasoning traces, which are key to increasing capability and agentic AI, pose a new and overlooked privacy risk. These traces are often rich in personal data and can easily leak into the final output, either accidentally or via prompt injection attacks. While increasing the test-time compute budget makes the model more private in its final answer, it enriches its easily accessible reasoning over sensitive data. The authors argue that future research should prioritize mitigation and alignment strategies to protect both the reasoning process and the final outputs.