Musing 129: Voice-based AI Agents: Filling the Economic Gaps in Digital Health Delivery

Timely paper out of IBM T.J. Watson Research Center and others

Today’s paper: Voice-based AI Agents: Filling the Economic Gaps in Digital Health Delivery. Wen et al. 22 July 2025. https://arxiv.org/pdf/2507.16229

Healthcare systems worldwide face growing challenges in allocating limited medical resources to meet increasing demand. Traditional healthcare delivery models, centered on episodic patient-provider interactions, often result in significant gaps in continuous care, particularly in preventive health monitoring and chronic disease management. The integration of voice-based AI agents in healthcare presents a transformative opportunity to bridge economic and accessibility gaps in digital health delivery. Today’s paper explores the role of large language model (LLM)-powered voice assistants in enhancing preventive care and continuous patient monitoring, particularly in underserved populations.

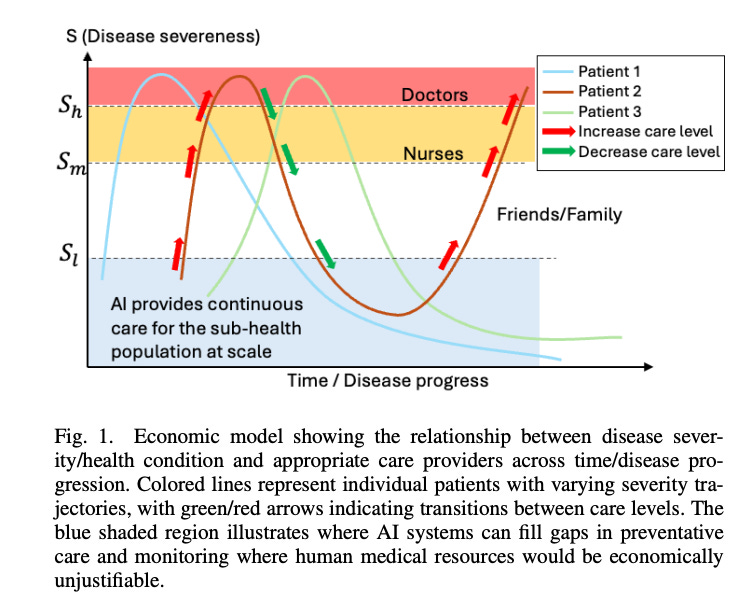

Let’s start with the economics. The figure above shows how AI can efficiently fill care gaps, particularly during lower-severity periods when deploying human medical resources would be economically unjustifiable yet monitoring remains beneficial. This model aligns with the concept of risk stratification in healthcare economics, where resources are allocated according to patient risk levels and expected benefits. It allows healthcare systems to maximize the utility of scarce physician and nursing resources by focusing them on high-severity cases while deploying AI for continuous monitoring of patients with less severe conditions.
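To make the allocation logic concrete, here is a toy sketch. It is not the authors’ model: each care option is given a hypothetical cost per contact and a hypothetical benefit that scales with patient severity, and the rule picks whichever option yields the largest positive net benefit. Because the AI call is assumed to be far cheaper, it becomes worthwhile at severities where a human contact is not.

```python
from dataclasses import dataclass


@dataclass
class CareOption:
    name: str
    cost_per_contact: float       # hypothetical cost of one contact
    benefit_per_severity: float   # hypothetical value per unit of severity


def choose_care(severity: float, options: list[CareOption]) -> str:
    """Return the option with the largest positive net benefit, or 'none'."""
    best_name, best_net = "none", 0.0
    for opt in options:
        net = opt.benefit_per_severity * severity - opt.cost_per_contact
        if net > best_net:
            best_name, best_net = opt.name, net
    return best_name


# Illustrative numbers only: a human contact is costly but more valuable per
# unit of severity; an AI call is cheap but less valuable per unit.
OPTIONS = [
    CareOption("human", cost_per_contact=100.0, benefit_per_severity=30.0),
    CareOption("ai_agent", cost_per_contact=2.0, benefit_per_severity=10.0),
]

for s in (0.1, 1.0, 3.0, 8.0):
    print(s, choose_care(s, OPTIONS))
```

With these made-up numbers the AI agent wins for severities between roughly 0.2 and 4.9, mirroring the lower-severity band described above; beyond that, the human contact’s larger benefit outweighs its higher cost.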

LLM-driven voice bot technology offers a compelling replacement for traditional interactive voice response (IVR) systems, which are often plagued by frustrating menu-driven interactions (“press 1 for X, press 2 for Y”) that can drive users to bypass the system entirely. These legacy systems stem from the limitations of earlier natural language processing, which could handle only basic speech recognition and simple intent mapping. Engineers were forced to build rigid menu structures that mirrored specific business logic, resulting in rule-based systems with minimal context awareness or personalization. As organizations expanded their services, these menus grew increasingly complex and unintuitive. Users faced multiple layers of options, making voice interfaces less appealing than web or text alternatives.

LLM-driven systems transform this experience by enabling natural language interaction. Patients can simply state their needs, and the AI determines the appropriate service, asks relevant follow-up questions, and provides personalized assistance, eliminating the need to navigate predefined menus. This approach improves the user experience and also makes the system more accessible to people with limited technological experience or physical limitations.
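The routing flow described above (state a need, identify the service, ask a follow-up) can be sketched as follows. In a real system the classifier would be an LLM call; here a keyword matcher stands in so the example runs offline, and the service names, required details, and prompts are all hypothetical.

```python
from typing import Callable, Optional

# Hypothetical service catalogue: details each service needs before acting.
REQUIRED_DETAILS = {
    "medication_refill": ["medication_name"],
    "appointment": ["preferred_date"],
    "symptom_report": ["symptom_duration"],
}

FOLLOW_UP = {
    "medication_name": "Which medication would you like refilled?",
    "preferred_date": "What day works best for you?",
    "symptom_duration": "How long have you had this symptom?",
}


def keyword_classifier(utterance: str) -> Optional[str]:
    """Stand-in for an LLM intent classifier (simple keyword matching)."""
    text = utterance.lower()
    if "refill" in text or "prescription" in text:
        return "medication_refill"
    if "appointment" in text or "schedule" in text:
        return "appointment"
    if any(word in text for word in ("pain", "cough", "fever", "dizzy")):
        return "symptom_report"
    return None


def next_turn(
    utterance: str,
    known: dict[str, str],
    classify: Callable[[str], Optional[str]] = keyword_classifier,
) -> tuple[Optional[str], str]:
    """Map free speech to a service, then ask for the first missing detail."""
    service = classify(utterance)
    if service is None:
        return None, "Could you tell me a bit more about what you need?"
    for detail in REQUIRED_DETAILS[service]:
        if detail not in known:
            return service, FOLLOW_UP[detail]
    return service, f"Thanks, I have everything I need for your {service.replace('_', ' ')}."


print(next_turn("I need a refill on my prescription", {}))
```

Unlike a menu tree, the patient never sees the catalogue; the system only asks for what is still missing.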

The lack of scalable models for preventive care often leads to delayed diagnoses, costly emergency treatments, and preventable hospitalizations, a pattern sometimes framed as the “prevention paradox.” AI-powered voice agents present a promising alternative by offering continuous patient engagement at significantly lower cost than traditional human-led care.

Several economic advantages showcase the potential of AI in healthcare delivery:

Economies of scale: Once deployed, AI voice assistants can support millions of users with little additional cost, as fixed infrastructure expenses are distributed across a broad patient base.

Round-the-clock availability: These systems operate 24/7, removing the time constraints associated with human healthcare providers and reducing the opportunity costs of limited care access.

Consistency in service: AI systems deliver standardized patient interactions, avoiding the variability caused by human fatigue, time pressure, or inconsistent workflows.

Proactive data collection: By continuously monitoring patient symptoms, AI agents facilitate early intervention and generate structured data that can be used by healthcare professionals to improve outcomes and reduce information gaps.

Rapid knowledge updates: New medical guidelines, regulatory recommendations, and clinical best practices can be integrated quickly into AI systems via techniques such as fine-tuning or retrieval-augmented generation, ensuring that patients receive the most up-to-date information without the delays or costs associated with retraining human staff.
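The economies-of-scale argument is simple arithmetic: average cost per user is the fixed cost spread across all users plus a per-user marginal cost, so it falls toward the marginal cost as the user base grows. The dollar figures below are hypothetical, not from the paper.

```python
def average_cost_per_user(fixed_cost: float, marginal_cost: float, users: int) -> float:
    """Average cost = fixed cost spread over users + per-user marginal cost."""
    if users <= 0:
        raise ValueError("users must be positive")
    return fixed_cost / users + marginal_cost


# Hypothetical figures: $1,000,000 per year of fixed platform cost and
# $5 per user per year of marginal (telephony + inference) cost.
for n in (1_000, 100_000, 1_000_000):
    print(n, round(average_cost_per_user(1_000_000, 5.0, n), 2))
```

Going from a thousand to a million users drops the average from $1,005 to $6 per user in this example, which is the "little additional cost" effect in concrete terms.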

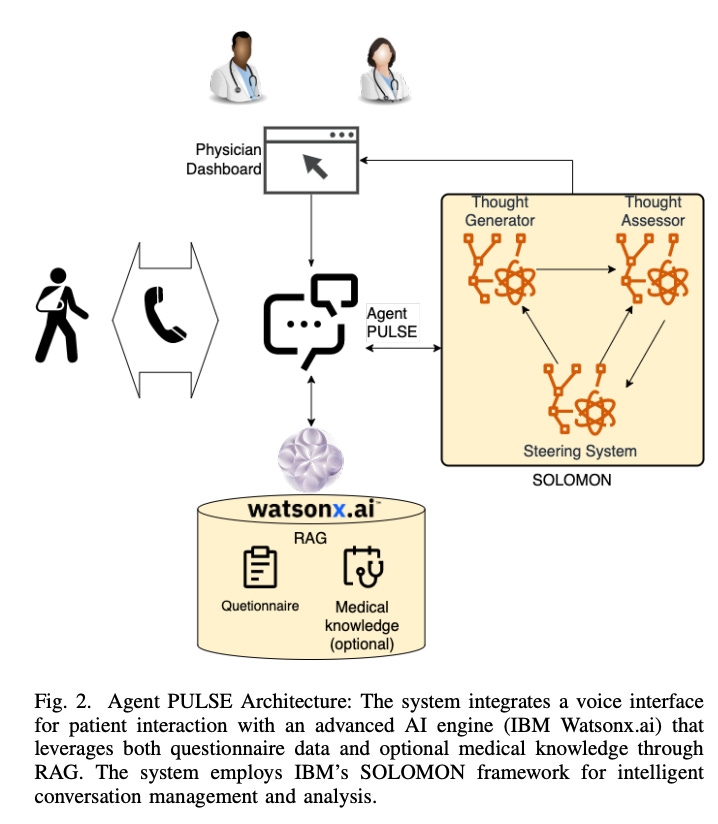

Agent PULSE, proposed by the authors, is a telephonic AI system designed to conduct medical surveys and monitor patient conditions through natural conversation. The core of the system is a dialogue management engine powered by prompt-tuned LLMs capable of understanding patient responses, asking follow-up questions, providing guidance and education, and escalating to human healthcare personnel when necessary.
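The paper does not spell out its escalation logic, so the following is only a sketch of where an escalation check could sit in a dialogue turn: a hypothetical red-flag phrase list is checked before the LLM is asked for a reply, and `generate_reply` is a placeholder for whatever prompted model the system uses.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical red-flag phrases; a real deployment would use criteria
# defined and validated by the care team, not a hard-coded list.
RED_FLAGS = ("chest pain", "can't breathe", "cannot breathe", "fainted")

Turn = tuple[str, str]  # (speaker, text)


@dataclass
class Conversation:
    turns: list[Turn] = field(default_factory=list)
    escalated: bool = False


def handle_patient_turn(
    conv: Conversation,
    patient_text: str,
    generate_reply: Callable[[list[Turn]], str],
) -> str:
    """Record the patient's turn, escalate on red flags, else ask the LLM."""
    conv.turns.append(("patient", patient_text))
    if any(flag in patient_text.lower() for flag in RED_FLAGS):
        conv.escalated = True
        reply = "I'm connecting you with a nurse now. Please stay on the line."
    else:
        reply = generate_reply(conv.turns)  # e.g. a prompted LLM call
    conv.turns.append(("agent", reply))
    return reply


def fake_llm(turns: list[Turn]) -> str:
    """Placeholder for a real LLM call."""
    return "Thanks for sharing. How has your sleep been this week?"


conv = Conversation()
print(handle_patient_turn(conv, "My knee is a bit stiff", fake_llm))
print(handle_patient_turn(conv, "Now I have chest pain", fake_llm), conv.escalated)
```

Checking for red flags before generating a reply keeps the escalation decision independent of the model’s output; substring matching is only a placeholder for proper clinical criteria.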

Agent PULSE consists of several key components working in concert to deliver intelligent healthcare interactions.

Voice Interface: The system is accessible through standard telephone lines, ensuring broad usability across different demographics, particularly beneficial for patients who may face literacy, technological, or financial barriers to smartphone or computer-based interfaces. Thanks to LLM integration, the voice interface supports multiple languages, providing access to diverse patient populations regardless of their native language.

The AI engine is built on IBM’s watsonx platform, which serves as the system’s core intelligence layer. This platform incorporates multiple advanced components, including watsonx.ai—a large language model (LLM) inference service that supports integration with various LLM providers and allows for customized configurations and fine-tuning. At the heart of its reasoning capabilities is SOLOMON, IBM’s proprietary multi-agent framework designed to manage conversations intelligently and analyze unstructured patient dialogue. SOLOMON automatically extracts structured data from natural language exchanges, transforming free-form conversations into standardized questionnaire responses that can be easily reviewed by care teams. Additionally, the system leverages Retrieval-Augmented Generation (RAG) to combine these structured inputs with relevant medical knowledge, enabling informed and context-aware responses.
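The combination of structured answers and retrieval described above can be illustrated with a small retrieval-augmented prompt builder. This is not SOLOMON or watsonx: the structured answers stand in for the output of an extraction step, the knowledge base is three made-up guideline snippets, and word overlap replaces the embedding similarity a real RAG pipeline would typically use.

```python
import re

# Hypothetical output of an extraction step (question id -> normalized answer).
# Not the real SOLOMON schema.
structured = {
    "wheezing_days_last_week": "4",
    "rescue_inhaler_use": "daily",
    "night_symptoms": "yes",
}

# Hypothetical guideline snippets standing in for a medical knowledge base.
KNOWLEDGE_BASE = [
    "Frequent rescue inhaler use may indicate poorly controlled asthma.",
    "Night symptoms more than twice a month warrant a care team review.",
    "Regular physical activity supports cardiovascular health.",
]


def tokens(text: str) -> set[str]:
    """Lowercase alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank docs by word overlap with the query (stand-in for embeddings)."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def build_prompt(answers: dict[str, str], docs: list[str]) -> str:
    """Combine structured answers with retrieved guidance into one prompt."""
    facts = "; ".join(f"{key.replace('_', ' ')}: {val}" for key, val in answers.items())
    lines = [f"Patient answers: {facts}", "Relevant guidance:"]
    lines += [f"- {doc}" for doc in retrieve(facts, docs)]
    lines.append("Write a short, plain-language reply for the patient.")
    return "\n".join(lines)


print(build_prompt(structured, KNOWLEDGE_BASE))
```

Because guidance lives in the retrievable store rather than in model weights, editing a snippet changes the next prompt immediately, which is the "rapid knowledge update" property noted earlier.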

The physician dashboard provides a comprehensive interface that enables healthcare providers to efficiently manage and customize patient interactions. Through this platform, providers can organize calling schedules for large patient cohorts, optimizing resource allocation and ensuring timely follow-ups. The dashboard also allows for the customization of survey parameters such as language preferences, questionnaire content, time zones, and other logistical details to better accommodate individual patient needs. Providers can review call results and survey summaries automatically generated by the SOLOMON system, using these insights to inform care interventions. Additionally, the dashboard supports longitudinal tracking of patient progress through automated trend analysis based on repeated assessments over time.
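Longitudinal trend analysis over repeated assessments could be as simple as fitting a least-squares slope to each patient’s scores. The sketch below assumes a numeric symptom score where higher means worse and uses a hypothetical threshold of 0.5 points per week to flag a worsening trend; the paper does not specify how its trend analysis works.

```python
def trend_slope(times: list[float], scores: list[float]) -> float:
    """Ordinary least-squares slope of score versus time."""
    n = len(times)
    if n < 2 or n != len(scores):
        raise ValueError("need at least two paired observations")
    mean_t = sum(times) / n
    mean_s = sum(scores) / n
    sxy = sum((t - mean_t) * (s - mean_s) for t, s in zip(times, scores))
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("times must not all be equal")
    return sxy / sxx


def flag_worsening(times: list[float], scores: list[float], threshold: float = 0.5) -> bool:
    """Flag a patient whose score rises faster than `threshold` per week."""
    return trend_slope(times, scores) > threshold


weeks = [0, 1, 2, 3]
symptom_scores = [3, 4, 4, 6]  # hypothetical weekly survey scores
print(round(trend_slope(weeks, symptom_scores), 2), flag_worsening(weeks, symptom_scores))
```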

The authors also describe a pilot study on patient engagement to demonstrate the utility of the approach. Prior to implementing Agent PULSE, Morehouse School of Medicine (MSM) faced significant challenges in monitoring patients’ conditions between clinical visits. Initially, MSM employed two nurses to conduct individual follow-up calls with patients. However, this approach proved costly and unsustainable, as the nurses quickly experienced burnout due to the high volume of calls. To improve scalability, MSM transitioned to a group-based model using Zoom meetings, where multiple patients simultaneously shared their disease progress with the care team. While this approach allowed providers to reach more patients, it required individuals to discuss personal symptoms and health concerns in the presence of other patients, raising privacy concerns and potentially limiting disclosure of sensitive information.

These challenges highlight the economic gap in healthcare delivery discussed earlier, where human-led monitoring becomes unsustainable at scale. The cultural and linguistic barriers faced by human providers further exacerbate these inefficiencies, as healthcare systems cannot economically justify hiring culturally matched staff for every patient subgroup. Voice-based AI agents like PULSE represent a potential solution by offering continuous, cost-effective patient monitoring while reducing the burden on healthcare providers and transcending cultural barriers through consistent, nonjudgmental interactions.

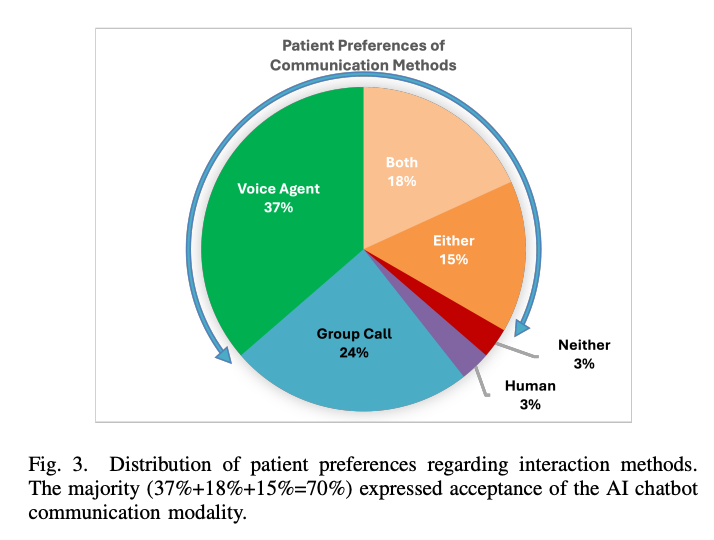

The authors’ findings revealed encouraging patient receptivity to AI-driven healthcare interactions. As shown in Figure 3 above, 37% of patients preferred the AI chatbot over other methods, while 24% favored the Zoom group approach primarily for its social interaction component. Notably, 18% of patients recognized value in both approaches, suggesting they serve complementary purposes, while 15% expressed no strong preference between the methods. Only 3% specifically preferred human interaction, and another 3% disliked both approaches.

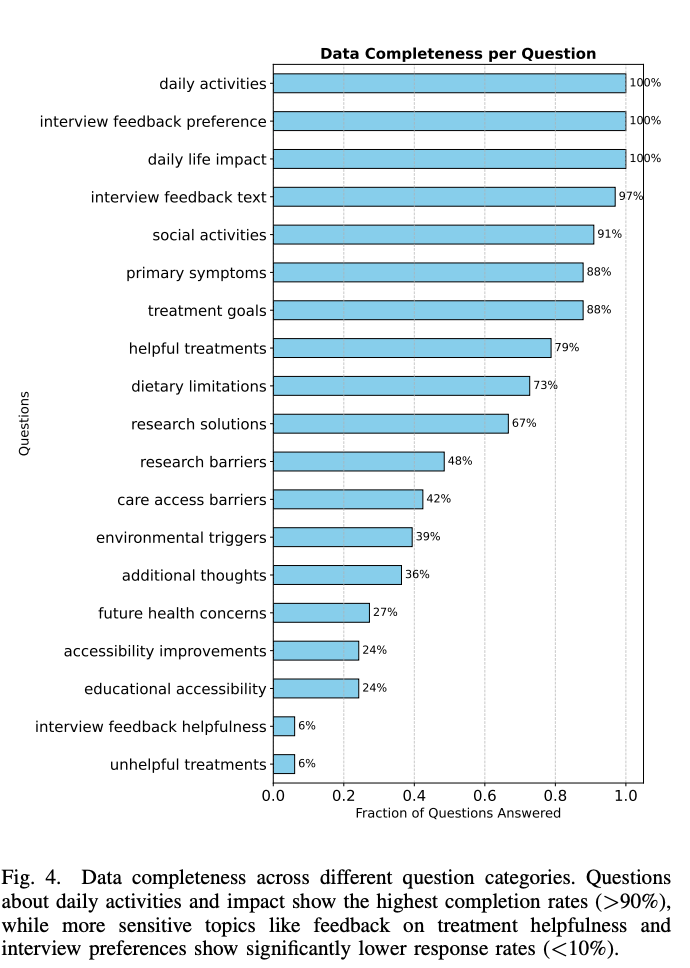

Analysis of response completeness (Figure 4 above) revealed significant variations across question categories. Questions about daily activities and daily life impact achieved the highest completion rates (94.4%), likely because these topics directly relate to patients’ symptoms and well-being, areas where patients have strong motivation to provide comprehensive information. In contrast, questions about research solutions, environmental triggers, and treatment feedback showed substantially lower completion rates, particularly those appearing later in the survey.
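Assuming a completion rate is simply answered prompts divided by asked prompts per category (the paper’s exact definition is not given here), the computation looks like this. The records are invented for illustration: 17 answers out of 18 prompts happens to work out to 94.4%, but that says nothing about how many responses the study actually collected.

```python
from collections import defaultdict

# Hypothetical per-prompt records: (question category, answered?).
# Illustrative data only, not the pilot study's responses.
records = (
    [("daily_life_impact", True)] * 17 + [("daily_life_impact", False)] * 1
    + [("environmental_triggers", True)] * 9 + [("environmental_triggers", False)] * 9
)


def completion_rates(rows: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of prompts answered, per question category."""
    asked: dict[str, int] = defaultdict(int)
    answered: dict[str, int] = defaultdict(int)
    for category, ok in rows:
        asked[category] += 1
        answered[category] += int(ok)
    return {c: answered[c] / asked[c] for c in asked}


rates = completion_rates(records)
for category, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{category}: {rate:.1%}")
```

Extending each record with the question’s position in the survey would let the same tally test the observed drop-off for later questions.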

The authors’ experience with Agent PULSE provides empirical support for the economic model presented earlier. The pilot study suggests that voice-based AI agents can effectively fill gaps in care delivery, particularly for routine monitoring between clinical visits. The high acceptance rate among patients (70% expressing comfort with AI interaction) indicates that such systems can achieve the user engagement needed to deliver economic benefits in real-world settings.
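The preference shares from Figure 3 sum to 100%, and one reading of the 70% comfort figure is the combined share of patients who preferred the AI, valued both approaches, or had no strong preference (37 + 18 + 15 = 70). That grouping is an assumption; the authors may have measured comfort differently.

```python
# Preference shares from Figure 3, as summarized above (percent).
preferences = {
    "prefer_ai": 37,
    "prefer_zoom_group": 24,
    "value_both": 18,
    "no_strong_preference": 15,
    "prefer_human": 3,
    "dislike_both": 3,
}

total = sum(preferences.values())
# Assumed grouping (not stated by the authors): these three groups are
# treated as "comfortable with AI interaction".
comfortable = sum(
    preferences[k] for k in ("prefer_ai", "value_both", "no_strong_preference")
)
print(total, comfortable)  # 100 70
```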

The authors also identify some technical challenges, followed by a roadmap. One of the most important factors affecting patient experience with voice AI systems is response time and consistency. When AI systems take too long to respond or fail to remember earlier parts of a conversation, patients may become frustrated and disengage. The authors’ work identified significant opportunities to improve how AI systems manage conversations through better memory management techniques. These improvements could make AI responses 2 to 3 times faster while maintaining a natural conversational flow. For patients, this means fewer awkward pauses and an interaction closer to speaking with a human healthcare provider.
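The paper’s memory-management techniques are not described in detail here. One common approach, sketched below as an assumption, keeps recent turns verbatim within a token budget and folds older turns into a compact summary so the prompt, and hence latency, stays bounded. Token counts are approximated by word counts, and `compress` is a placeholder for an LLM summarizer.

```python
from dataclasses import dataclass, field


def approx_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (an assumption)."""
    return len(text.split())


@dataclass
class ConversationMemory:
    max_recent_tokens: int = 200   # hypothetical budget for verbatim turns
    summary: list[str] = field(default_factory=list)
    recent: list[str] = field(default_factory=list)

    def add(self, turn: str) -> None:
        """Append a turn; move the oldest turns into the summary if over budget."""
        self.recent.append(turn)
        while (
            len(self.recent) > 1
            and sum(approx_tokens(t) for t in self.recent) > self.max_recent_tokens
        ):
            self.summary.append(self.compress(self.recent.pop(0)))

    @staticmethod
    def compress(turn: str, keep_words: int = 8) -> str:
        """Stand-in for an LLM summarizer: keep only the first few words."""
        return " ".join(turn.split()[:keep_words])

    def prompt_context(self) -> str:
        """Bounded context: compact summary plus verbatim recent turns."""
        return "Summary: " + " | ".join(self.summary) + "\nRecent:\n" + "\n".join(self.recent)


mem = ConversationMemory(max_recent_tokens=10)
for turn in (
    "patient: my cough started three days ago and is worse at night",
    "agent: thanks, any fever?",
    "patient: no fever",
):
    mem.add(turn)
print(mem.prompt_context())
```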

Also, to maximize effectiveness, voice-based AI health agents must adapt to individual patient communication styles, preferences, health literacy levels, and cultural contexts. Current methods predominantly employ static prompts that don’t fully exploit LLM adaptive capabilities. A comprehensive personalization framework should include: dynamic patient profiles that evolve based on interaction history; language complexity adjustment matching health literacy levels; cultural competence in conversational strategies; and personalized timing and frequency of outreach based on patient preferences and response patterns. These capabilities would significantly improve engagement, trust, and sustained patient participation while ensuring comprehension and adherence across diverse populations.
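A dynamic patient profile could start as small as the sketch below, which tracks an exponentially weighted answer rate per call hour to pick outreach timing and switches to simpler wording after repeated clarification requests. The field names, the 0.3 smoothing weight, the neutral 0.5 prior, and the two-request rule are all hypothetical choices, not part of the authors’ framework.

```python
from dataclasses import dataclass, field


@dataclass
class PatientProfile:
    """Hypothetical evolving profile; all fields and rules are illustrative."""
    answer_rate_by_hour: dict[int, float] = field(default_factory=dict)
    clarification_requests: int = 0
    reading_level: str = "standard"   # "standard" or "simple"
    alpha: float = 0.3                # weight given to the newest observation

    def record_call(self, hour: int, answered: bool) -> None:
        """Exponentially weighted update of the answer rate for this hour."""
        prior = self.answer_rate_by_hour.get(hour, 0.5)  # neutral prior
        self.answer_rate_by_hour[hour] = (1 - self.alpha) * prior + self.alpha * float(answered)

    def best_hour(self, default: int = 10) -> int:
        """Hour with the highest estimated answer rate (default if no data)."""
        if not self.answer_rate_by_hour:
            return default
        return max(self.answer_rate_by_hour, key=self.answer_rate_by_hour.get)

    def record_clarification_request(self) -> None:
        """Simplify wording after two clarification requests."""
        self.clarification_requests += 1
        if self.clarification_requests >= 2:
            self.reading_level = "simple"


profile = PatientProfile()
profile.record_call(hour=9, answered=False)
profile.record_call(hour=18, answered=True)
profile.record_call(hour=18, answered=True)
profile.record_clarification_request()
profile.record_clarification_request()
print(profile.best_hour(), profile.reading_level)  # 18 simple
```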

To close this musing, a note on broader ethical considerations: ensuring that AI complements human connection, providing seamless escalation to human providers, and vigilantly monitoring for algorithmic bias (especially given historical disparities) are all critical. Voice-based AI offers an inclusive path to democratizing healthcare, transforming care delivery by extending provider reach to underserved populations and addressing economic constraints. Future research should target longitudinal outcomes, remote monitoring integration, and condition-specific applications.

Most importantly, as the authors themselves note, success requires multidisciplinary collaboration: clinicians contributing expertise, technologists developing robust systems, policymakers establishing supportive frameworks, and patients providing essential feedback. This collaborative approach will maximize voice-based AI’s potential as an entry point for sustainable healthcare delivery.