Musing 13: Can AI Models Appreciate Document Aesthetics?

A preprint out of Thomson Reuters on whether state-of-the-art document AI models effectively capture the nuances of document aesthetics.

Today’s paper: Can AI Models Appreciate Document Aesthetics? An Exploration of Legibility and Layout Quality in Relation to Prediction Confidence. Mar. 27, 2024. https://arxiv.org/pdf/2403.18183.pdf

This paper was presented at the Workshop on Psychology-informed Information Access Systems (PsyIAS) and explores the extent to which state-of-the-art (SOTA) document AI models capture nuances of document aesthetics, particularly focusing on legibility and layout quality. We all know the importance of these aspects when we’re reviewing documents. No one likes to read a document that is aesthetically displeasing and takes ‘work’ to slog through. As I like to say, “the easier a document or presentation is on the eyes and mind, the harder it was to write and put together.”

All of that being said, this musing might be harder to read if you’re not familiar with some of the jargon in document aesthetics. Probably because it took me time to understand it as well, I haven’t been able to distill it at the level I would like to. Nevertheless, I’ll try and give it a shot.

The authors specifically investigate how aesthetic elements like noise, font-size contrast, alignment, and complexity affect AI model confidence through correlational analysis. Their exploratory model analysis sheds light on the interaction between document design and AI interpretation, aiming to bridge the gap between human cognitive processes and AI's understanding of aesthetic elements. The key contributions of this paper include:

Hypothesis Generation on Document Design Effects: The paper compiles relevant research and formulates hypotheses on how different aspects of document design, including legibility and layout quality, influence document understanding in AI models.

Quantitative Measures Related to Document Aesthetics: The authors develop and curate a set of quantitative measures to assess the impact of document aesthetics on model confidence, contributing tools for further research in document AI aesthetics.

Correlational Model Analysis: Through an exploratory analysis, the paper evaluates the correlation between document aesthetics and model confidence, providing insights into how aesthetic elements impact AI models' performance.

The experimental findings of the paper are anchored in the relationship between various aesthetic measures and the confidence levels of AI models, specifically focusing on document aesthetics elements such as image noise, font size, contrast, alignment, and layout complexity. The authors opted for Spearman's rank correlation coefficient to analyze these relationships due to the non-normal and non-linear nature of the data, and they meticulously removed outliers to mitigate the influence of factors like OCR errors and data inaccuracies. Here's a summary of the key findings:

Image Noise: The study utilized No-Reference Image Quality Assessment techniques to evaluate image noise, particularly focusing on high-frequency components as a marker of noise. The analysis aimed to understand how noise levels in document images impact model confidence, employing techniques like the 2D Discrete Fourier Transform to quantify noise.

Font Size and Contrast: The researchers developed a measure to assess the distraction effect related to font size and contrast, comparing sizes of two nearest elements to understand how variations in font size impact model attention and confidence.

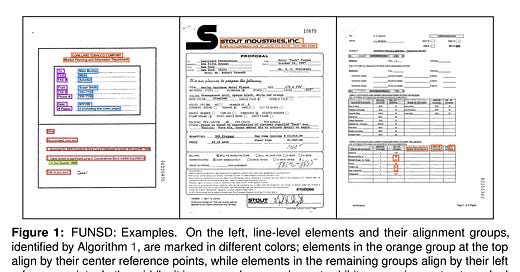

The outcomes of these exploratory analyses underscored the complexity of how document aesthetics influence AI model predictions. While the paper does not provide exhaustive conclusions, there are some interesting qualitative results that I’ll focus on here. One of the datasets on which they ran experiments was FUNSD, which consists of 199 (149/50 for training/test; 30 samples from the training set are used for validation) noisy, scanned forms and a total of 9,707 annotated semantic entities. This dataset is widely used as a benchmark dataset for tasks such as OCR, spatial layout analysis, and entity extraction/linking.

Another data set is IDL, a vast collection of documents created by industries which influence public health. It has fostered multiple datasets for VrDU tasks, such as RVL-CDIP and DocVQA. The authors focused on the task of document page classification, i.e., labeling pages originating from documents with a single class, using a subset of about 15K (80% / 10% / 10% for training / validation / test) OCR’d documents from OCR-IDL. The conclusions are not completely straightforward:

My thoughts:

I always appreciate it when the authors have a clear research question (which aesthetic elements are perceived by the multimodal document AI systems when making predictions?) and state and explore multiple hypotheses in the preprint.

To their credit, the authors recognize the complexity of their own conclusions and don’t opt for the easy way out in stating or explaining their experimental findings. However, on that note, I feel that the captions of the figures could have been clearer.

The authors’ work opens pathways for further research by demonstrating that aesthetic elements like font size contrast and image noise do have measurable impacts on model behavior, albeit in nuanced and variable ways.

The most important aspect of the paper is the problem/topic it’s choosing to address. It’s always refreshing to see something like this rather than (yet) another predictive problem. I hope we’ll see more work like this out of this group.