Musing 138: Advances in Large Language Models for Medicine

Interesting paper out of the University of Illinois at Chicago, Jinan University, and Guangdong Eco-Engineering Polytechnic.

Today’s paper: Advances in Large Language Models for Medicine. Kan et al. 23 Sep 2025. https://arxiv.org/pdf/2509.18690

The healthcare industry stands at the precipice of a technological revolution. Large language models (LLMs) — the same artificial intelligence systems powering conversational tools like ChatGPT — are rapidly reshaping how medical professionals diagnose diseases, develop treatments, and deliver patient care. Recent research reveals just how dramatic this transformation has become.

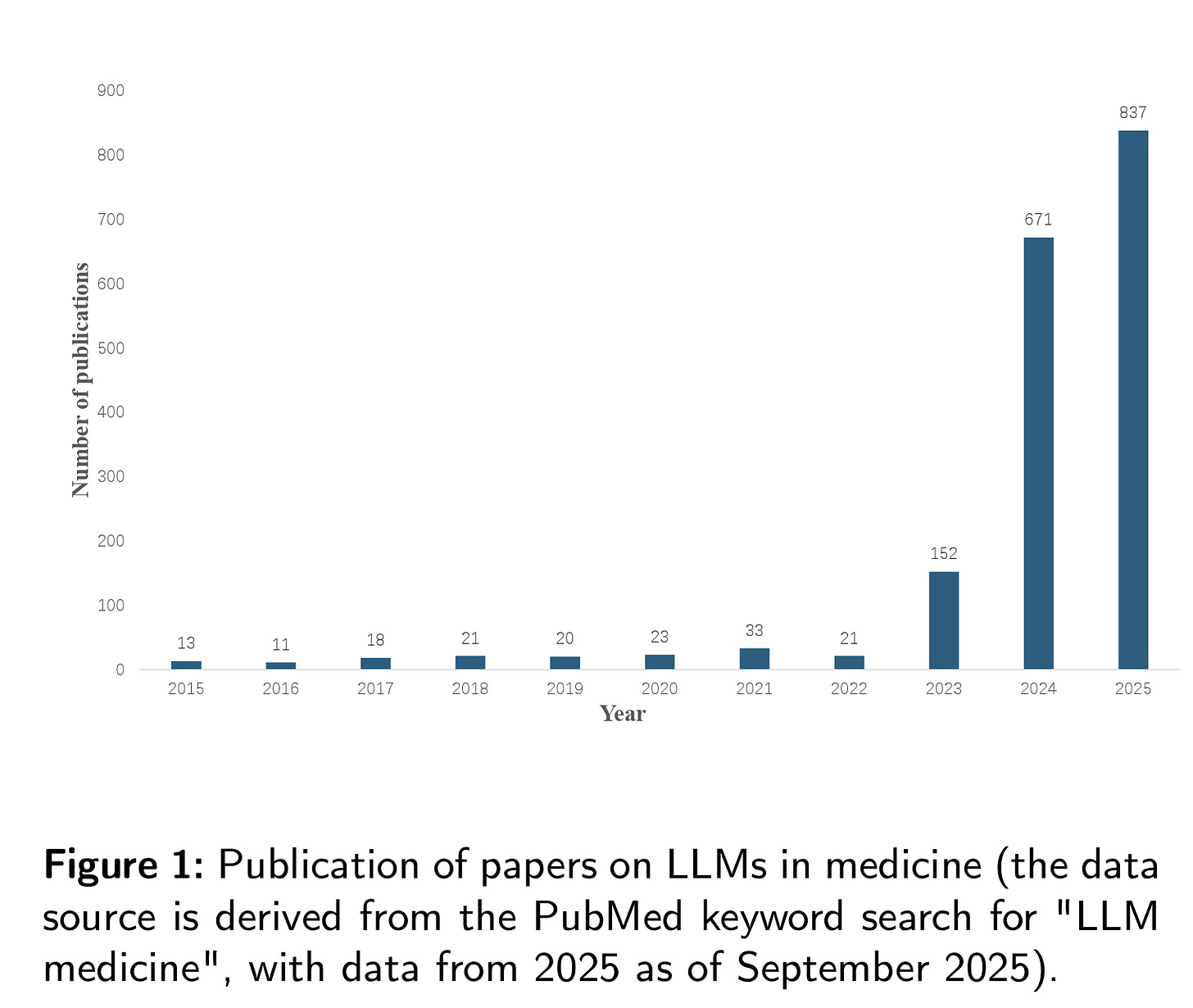

The numbers tell a compelling story. As shown in Figure 1, scientific publications on medical LLMs have exploded from a mere handful in 2015 to over 800 papers in 2024 alone. This rapid growth reflects not just academic interest, but a fundamental shift in how the medical community views AI’s potential to address longstanding healthcare challenges.

Modern medicine, despite its remarkable advances, faces persistent constraints that LLMs are uniquely positioned to address. Specialization has created silos where doctors excel in narrow domains but may struggle with complex cases requiring interdisciplinary knowledge. The sheer volume of medical literature, which is growing too rapidly for any individual to comprehensively track, means even the most dedicated clinicians can’t stay current with every relevant development in their field.

Medical LLMs offer a different paradigm. These systems can process vast amounts of medical literature, synthesize information across specialties, and provide comprehensive analysis that no single human expert could match. They’re not replacing doctors’ judgment, but rather augmenting human expertise with computational power that can identify patterns and connections across enormous datasets.

The development of medical LLMs follows three distinct technical approaches, each with unique advantages and resource requirements. Pre-training involves building models from scratch using massive medical datasets, an approach that yields highly specialized systems but requires substantial computational resources. Google’s PaLM model, for instance, reportedly cost between $25 million and $30 million to train, while estimates suggest GPT-4’s development exceeded $100 million.

Fine-tuning offers a more accessible alternative, adapting existing general-purpose models like GPT or LLaMA for medical applications. This approach has produced notable successes, including models that can match or exceed human performance on medical licensing exams. The third path, prompting, requires no model modification at all, instead using carefully designed inputs to guide existing models toward medical tasks.

Each approach serves different needs. Resource-constrained organizations might opt for sophisticated prompting strategies, while major healthcare systems could invest in custom fine-tuned models. The key insight is that effective medical AI doesn’t require starting from scratch: strategic adaptation of existing models can yield remarkable results.
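To make the prompting path concrete, here is a minimal sketch of a few-shot prompt builder. It is an illustration only, not the method from the paper: the `Example` class, the `build_prompt` function, and the sample questions are all hypothetical, and no model is actually called.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked question/answer pair shown to the model (hypothetical)."""
    question: str
    answer: str


def build_prompt(instruction: str, examples: list[Example], question: str) -> str:
    """Assemble a few-shot prompt as plain text; no model is called here."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Question: {ex.question}")
        parts.append(f"Answer: {ex.answer}")
        parts.append("")
    # The final question is left unanswered for the model to complete.
    parts.append(f"Question: {question}")
    parts.append("Answer:")
    return "\n".join(parts)


# Illustrative inputs, assumed for this sketch.
examples = [
    Example("Which vitamin deficiency causes scurvy?", "Vitamin C"),
]
prompt = build_prompt(
    "Answer the medical exam question concisely.",
    examples,
    "Which hormone lowers blood glucose?",
)
print(prompt)
```

The resulting string would be sent to whatever existing model an organization already has access to, which is why this route needs no training budget at all.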

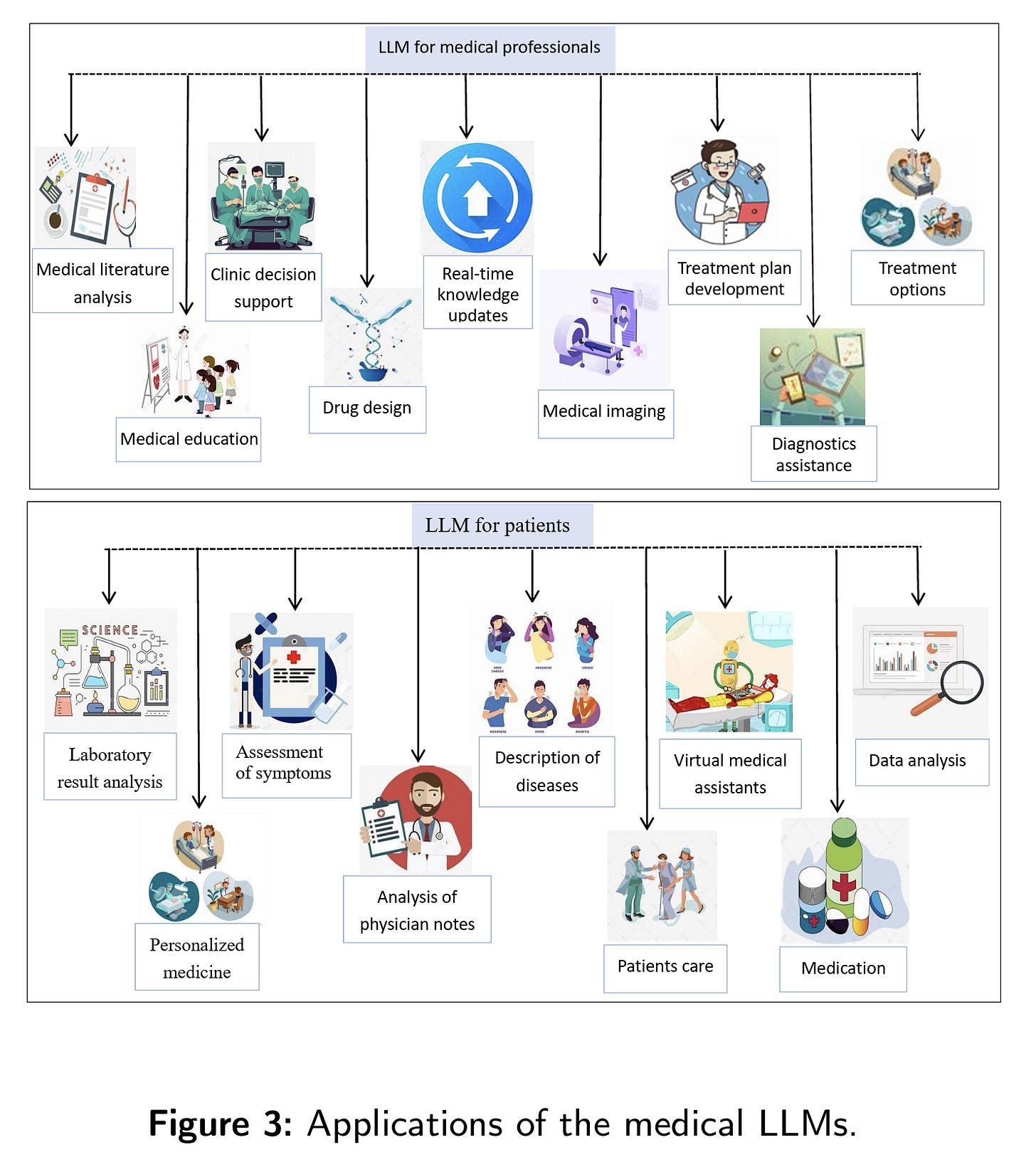

The scope of medical LLM applications extends far beyond simple question-answering systems. Figure 3 illustrates this comprehensive landscape, revealing how these models are transforming virtually every aspect of healthcare delivery.

In clinical decision support, LLMs excel at synthesizing patient data, medical literature, and treatment guidelines to assist with diagnosis and treatment planning. They can rapidly analyze complex cases, suggest differential diagnoses, and recommend evidence-based treatment protocols. For personalized medicine, these systems can integrate genomic data, patient history, and lifestyle factors to develop truly individualized treatment strategies.

Medical education represents another transformative application. LLMs can create realistic patient simulations, generate diverse case studies, and provide personalized learning experiences that adapt to individual students’ knowledge gaps. Unlike traditional educational resources, these AI tutors never tire, can handle unlimited student queries, and stay current with the latest medical knowledge.

Perhaps most intriguingly, LLMs are accelerating drug discovery and development. By analyzing molecular structures, predicting drug interactions, and identifying novel therapeutic targets, these models can significantly compress the typically decade-long journey from laboratory to clinic. Early research suggests they can reveal drug targets that traditional methods might miss entirely.

Assessing medical LLM performance requires more nuanced approaches than traditional AI evaluation. Machine-based metrics (accuracy scores, F1 measures, and automated benchmarks) provide baseline performance indicators but fail to capture critical dimensions like safety, empathy, and practical utility in real clinical settings.
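For readers who have not computed these machine-based metrics by hand, the sketch below shows accuracy and F1 on a toy binary task. The labels are made up for illustration, and the helper names `accuracy` and `f1_score` are my own rather than anything from the paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy data (assumed): 1 = condition present, 0 = condition absent.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

print(accuracy(y_true, y_pred))  # 0.75
print(f1_score(y_true, y_pred))  # 0.75
```

Both numbers summarize label agreement only, which is exactly why they say nothing about safety, empathy, or clinical usefulness.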

Human-centered evaluation has emerged as equally important, incorporating assessments from medical professionals and even using advanced LLMs themselves as evaluators. This dual approach recognizes that medical AI success depends not just on technical accuracy but on practical integration into complex healthcare workflows.

The evaluation challenge reflects a broader tension in medical AI: passing standardized medical exams doesn’t necessarily indicate readiness for real clinical practice. Complex professional skills (e.g., clinical reasoning, patient communication, and handling uncertainty) require more sophisticated assessment frameworks than simple question-answering tasks.

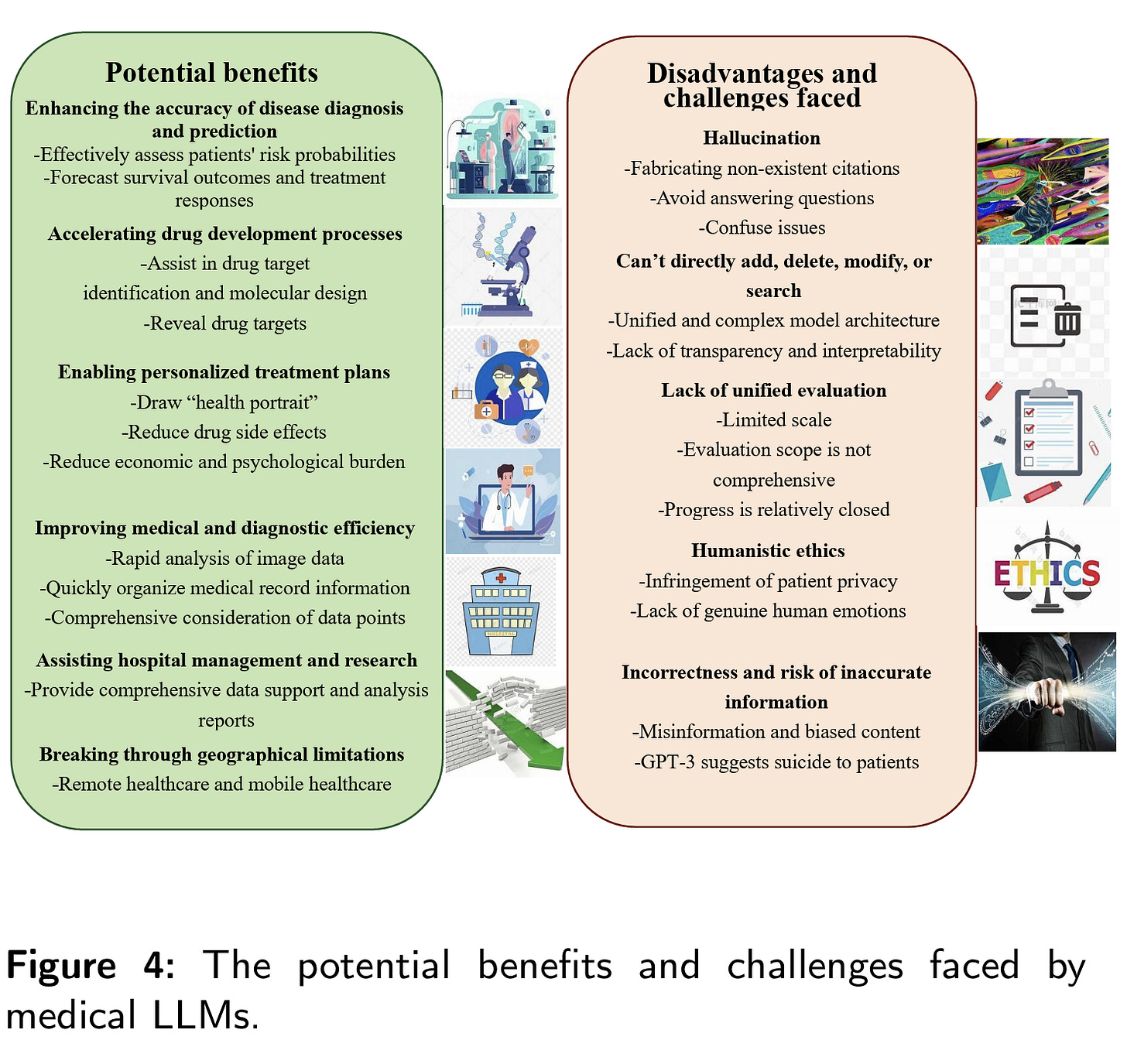

The potential benefits of medical LLMs are substantial, but so are the risks. Figure 4 captures this duality. On the benefit side, these systems can enhance diagnostic accuracy, accelerate research, and expand access to high-quality medical expertise across geographical boundaries. Rural clinics could access the same level of AI-assisted analysis available at major medical centers, potentially reducing healthcare disparities.

However, significant challenges accompany these opportunities. Hallucinations, i.e., instances where models generate plausible but factually incorrect information, pose serious risks in medical contexts where accuracy is paramount. The infamous case of a GPT model suggesting suicide to a patient underscores how AI systems can produce dangerous recommendations despite their general competence.

Privacy concerns loom large, as medical LLMs require access to sensitive patient data for training and operation. The complexity of these systems also creates transparency challenges: when an AI recommends a particular treatment, understanding the reasoning behind that recommendation becomes crucial for clinical acceptance and regulatory compliance.

Bias represents another critical concern. If training data contains historical biases in medical treatment or represents only certain populations, LLMs may perpetuate or amplify these inequities. Ensuring fair and equitable AI systems requires careful attention to data diversity and ongoing monitoring of model outputs across different demographic groups.
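One simple form this monitoring could take is comparing a metric across groups. The sketch below is a hedged illustration under my own assumptions: the group names, the records, and the helpers `accuracy_by_group` and `largest_gap` are hypothetical, and a real audit would use richer fairness measures and far more data.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, label, prediction) tuples."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, label, pred in records:
        totals[group] += 1
        hits[group] += int(label == pred)
    return {g: hits[g] / totals[g] for g in totals}


def largest_gap(per_group):
    """Difference between the best-served and worst-served group."""
    values = list(per_group.values())
    return max(values) - min(values)


# Toy records (assumed): (demographic group, true label, model prediction).
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 1, 1),
]

per_group = accuracy_by_group(records)
print(per_group)               # {'A': 0.75, 'B': 0.5}
print(largest_gap(per_group))  # 0.25
```

A persistent gap like this would be a signal to examine the training data and the model's behavior for the under-served group.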

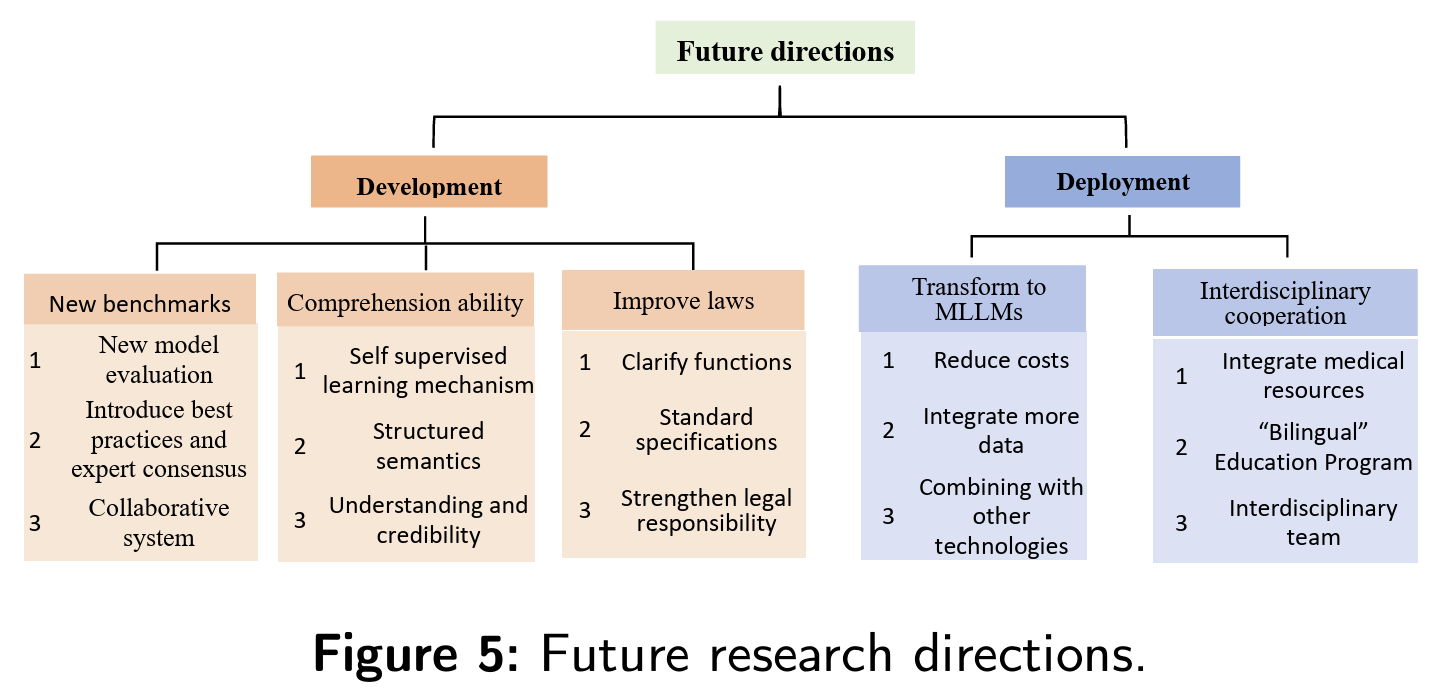

Future developments in medical LLMs point toward even more sophisticated capabilities. Figure 5 outlines key research directions, including the evolution toward multimodal systems that can process not just text but medical images, laboratory results, and physiological signals simultaneously.

The transition from text-only to multimodal models represents a particularly significant advancement. Current medical LLMs primarily process written information, but healthcare involves rich visual data (radiological images, pathology slides, surgical videos). Next-generation systems that can seamlessly integrate these different data types could provide more comprehensive clinical analysis.

Interdisciplinary collaboration emerges as another crucial development direction. The most effective medical AI systems will likely combine expertise from computer science, medicine, ethics, and regulatory affairs. This requires “bilingual” professionals who understand both medical practice and AI technology, a skill set that educational institutions are only beginning to address.

Enhanced evaluation frameworks represent another frontier. Traditional benchmarks focused on medical knowledge may give way to more sophisticated assessments that evaluate AI systems in realistic clinical scenarios, measuring not just accuracy but practical utility, safety, and integration with human workflows.

The explosive growth in medical LLM research and development reflects genuine excitement about these technologies’ transformative potential. However, realizing this potential requires careful attention to safety, equity, and integration challenges.

Success will depend not just on technical advancement but on developing appropriate regulatory frameworks, training healthcare professionals to work effectively with AI systems, and ensuring that benefits reach all populations rather than exacerbating existing healthcare disparities.

The medical LLM revolution is not a distant future possibility. It’s happening now. Healthcare organizations, medical professionals, and policymakers must engage thoughtfully with these technologies to harness their benefits while mitigating their risks. The goal isn’t to replace human medical expertise but to augment it in ways that improve patient outcomes, expand access to care, and advance medical knowledge.

In closing: as this field continues its rapid evolution, the healthcare industry faces both unprecedented opportunities and significant responsibilities. The decisions made today about medical AI development, deployment, and governance will shape the future of healthcare for generations to come.