Musing 21: OSWORLD: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments

A cool paper out of the University of Hong Kong, CMU, Salesforce Research and the University of Waterloo.

Today’s paper: OSWORLD: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments. Xie et al. 11 Apr. 2024. https://arxiv.org/pdf/2404.07972.pdf

In AI research, when we’re discussing ‘multimodal agents’, we tend to think ‘game-playing.’ Which is fun, but not all that useful. Instead, wouldn’t it be great if we had agents that were more like us, and could do the kinds of things we can, such as work on a computer?

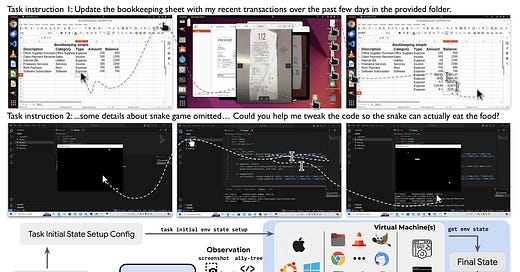

This is what the authors consider in this paper. They propose OSWORLD, which is a “first-of-its-kind scalable, real computer environment for multimodal agents”, supporting task setup, execution-based evaluation, and interactive learning across operating systems. It can serve as a unified environment for evaluating open-ended computer tasks that involve arbitrary apps. The authors also create a benchmark of 369 real-world computer tasks in OSWORLD with reliable, reproducible setup and evaluation scripts. Consider the figure below to see how cool this really is.

The OSWORLD environment uses a configuration file (see below) for initializing tasks (highlighted in red), agent interaction, post-processing upon agent completion (highlighted in orange), retrieving files and information (highlighted in yellow), and executing the evaluation function (highlighted in green). Environments can run in parallel on a single host machine for learning or evaluation purposes. Headless operation is supported.

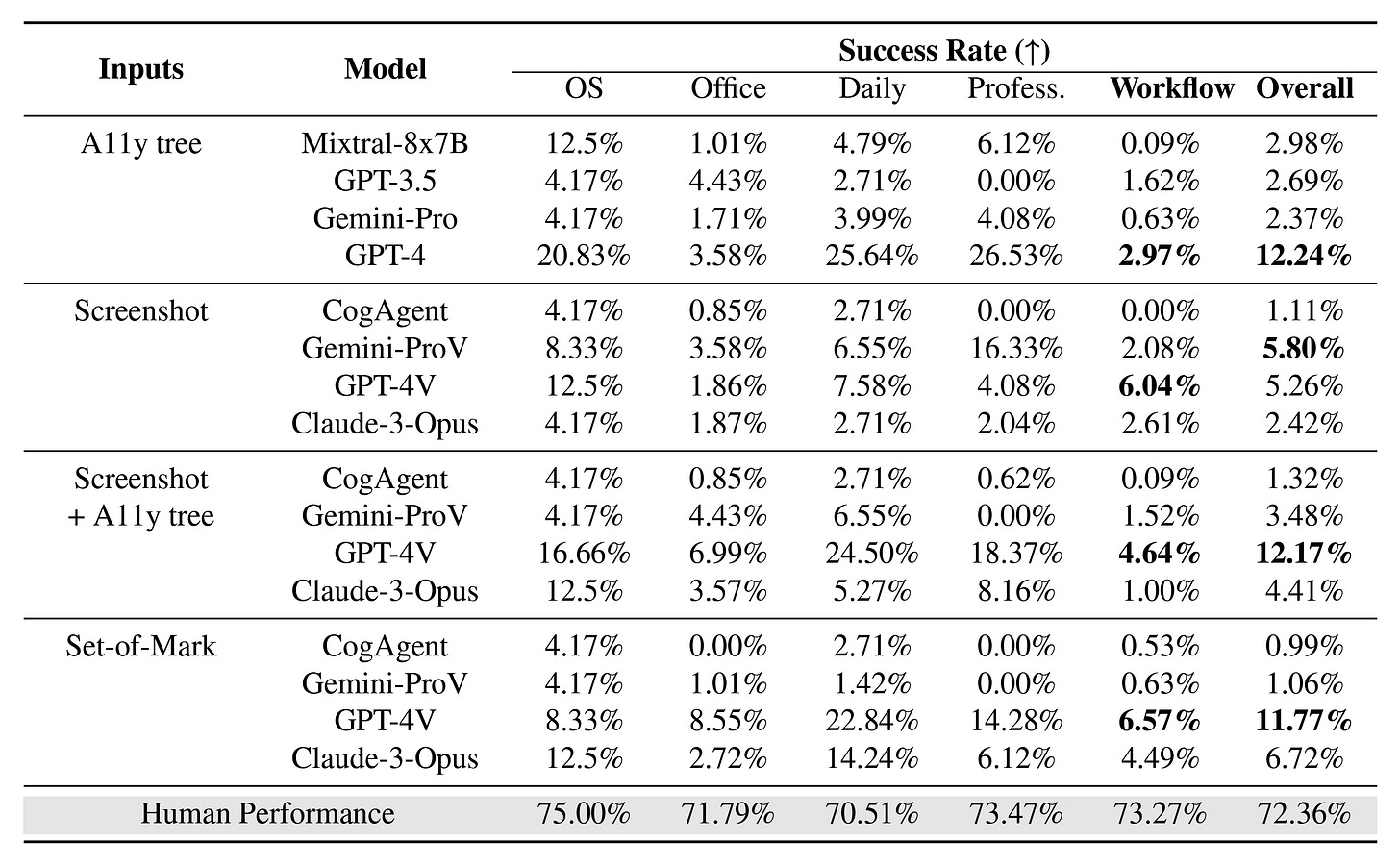
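To make that lifecycle concrete, here is a minimal toy sketch of the same five stages: setup, agent interaction, post-processing, result retrieval, and evaluation. Everything in it is an assumption made for illustration: `TaskConfig`, `run_task`, the field names, and the dictionary standing in for a machine are all hypothetical, and none of it is OSWORLD's actual API or config schema.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in for a real computer: a map from file path to contents.
Machine = Dict[str, str]
Agent = Callable[[str, Machine], None]


@dataclass
class TaskConfig:
    """Illustrative task description; the field names are assumptions."""
    instruction: str
    setup: List[Callable[[Machine], None]]        # initialise the task state
    postprocess: List[Callable[[Machine], None]]  # run after the agent finishes
    get_result: Callable[[Machine], str]          # retrieve files/information
    evaluate: Callable[[str], float]              # execution-based check


def run_task(task: TaskConfig, agent: Agent) -> float:
    machine: Machine = {}
    for step in task.setup:              # 1. task setup
        step(machine)
    agent(task.instruction, machine)     # 2. agent interaction
    for step in task.postprocess:        # 3. post-processing
        step(machine)
    result = task.get_result(machine)    # 4. retrieve result
    return task.evaluate(result)         # 5. evaluation function


def make_draft(machine: Machine) -> None:
    machine["draft.txt"] = "hello\n"


def strip_whitespace(machine: Machine) -> None:
    for path in list(machine):
        machine[path] = machine[path].strip()


rename_task = TaskConfig(
    instruction="Rename draft.txt to final.txt",
    setup=[make_draft],
    postprocess=[strip_whitespace],
    get_result=lambda m: m.get("final.txt", ""),
    evaluate=lambda r: 1.0 if r == "hello" else 0.0,
)


def good_agent(instruction: str, machine: Machine) -> None:
    machine["final.txt"] = machine.pop("draft.txt")


def idle_agent(instruction: str, machine: Machine) -> None:
    pass  # does nothing, so the task should fail


print(run_task(rename_task, good_agent))
print(run_task(rename_task, idle_agent))
```

The point of the sketch is that the score comes from inspecting the final state of the machine rather than from matching the agent's actions against a reference trajectory, which is what "execution-based evaluation" means here.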

Because this is more of a benchmark/evaluation paper, the authors need to evaluate existing models in this environment, and they do. They evaluate both LLM- and VLM-based agent baselines, including the GPT-4V series, the Gemini-Pro models, and Claude-3 Opus, as well as Mixtral and CogAgent from the open-source community.

Long story short: it will be a while before language models replace us on these types of problems. The paper's detailed performance analysis shows that while humans can complete over 72% of the tasks successfully, the best-performing model achieves a success rate of only 12.24%. This stark contrast highlights the current limitations of AI agents in handling complex, open-ended tasks in real computer environments. The experiments also point to the specific challenges these agents face, such as trouble with GUI interactions and limited operational knowledge, which is useful for deciding where future work should focus.

To encourage further research and development, the authors make the entire OSWORLD environment, including the tasks, evaluation scripts, and baseline model implementations, publicly available. So we could all try it out.

And on that note, have a great weekend everyone, and I’ll be back with a new Substack post next week.