Musing 40: How Far Are We From AGI?

A provocative paper on AGI out of UIUC, Chicago, UCSC, CMU and Johns Hopkins University

Today’s paper: How Far Are We From AGI? Feng et al. 16 May 2024. https://arxiv.org/pdf/2405.10313

AGI has moved well beyond speculative science fiction and is now a very real, if not inevitable, possibility. It might be achieved within this decade. The authors of today’s paper ask: how far are we from it? They’ve tried to answer the question by writing a 120-page, book-chapter-ish survey. If you have the time, it’s worth the read, although the figures tell much of the story.

I’m going to try to pull out quotes and passages from the paper that I think are meaningful. The more technical components require a deeper reading and are not summarized as much in this musing.

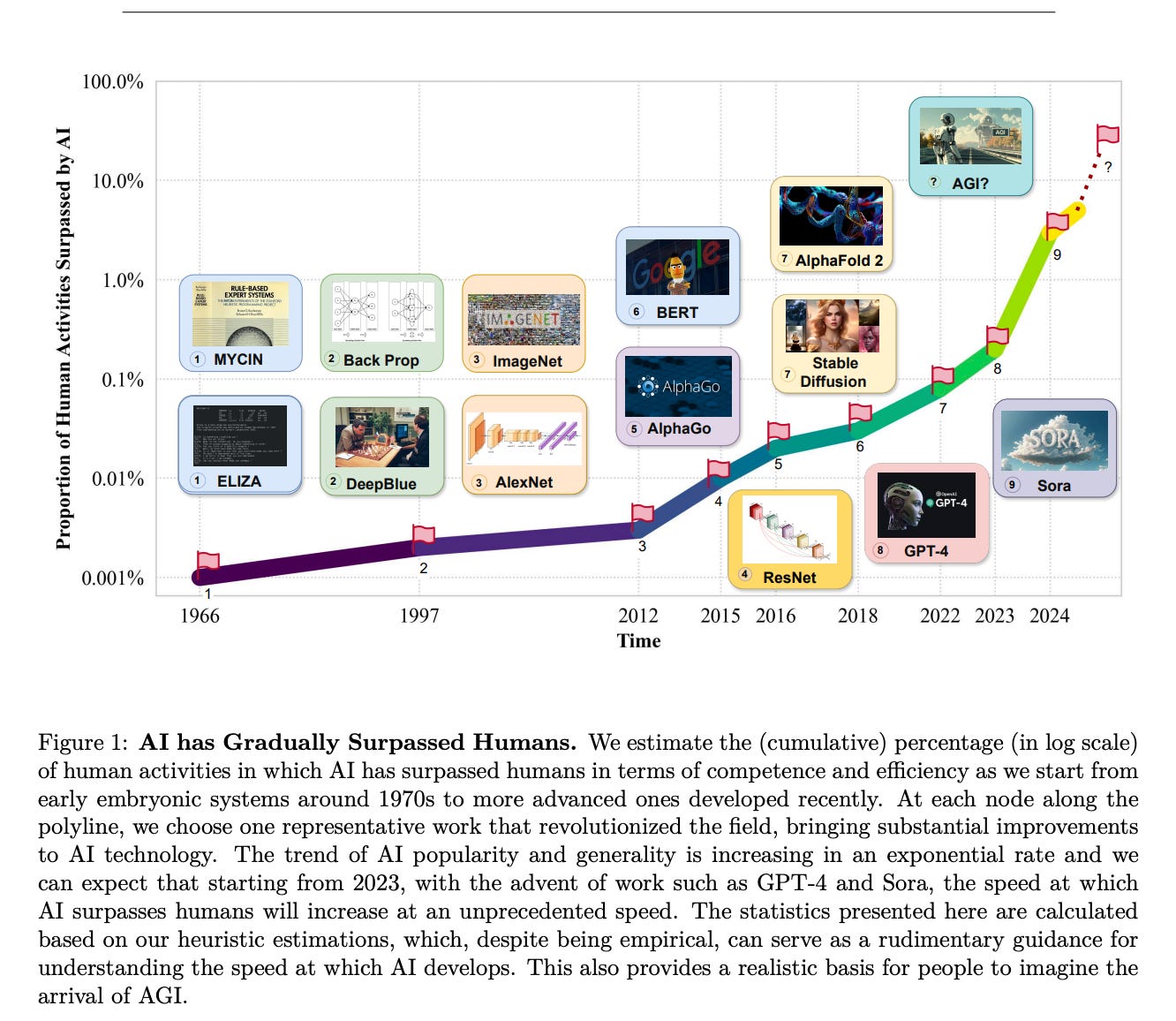

“AGI is Within Closer Reach than Ever. As is summarized in Figure 1, the rapid development of AI has enabled its capabilities to surpass human activities in increasingly more fields, which indicates that the realization of AGI is getting closer. Hence, it is of great practical significance to revisit the question of how far we are from AGI and how can we responsibly achieve AGI by conducting a comprehensive survey that clearly establishes the expectation of future AGI and elaborates on the gap from our current AI development.”

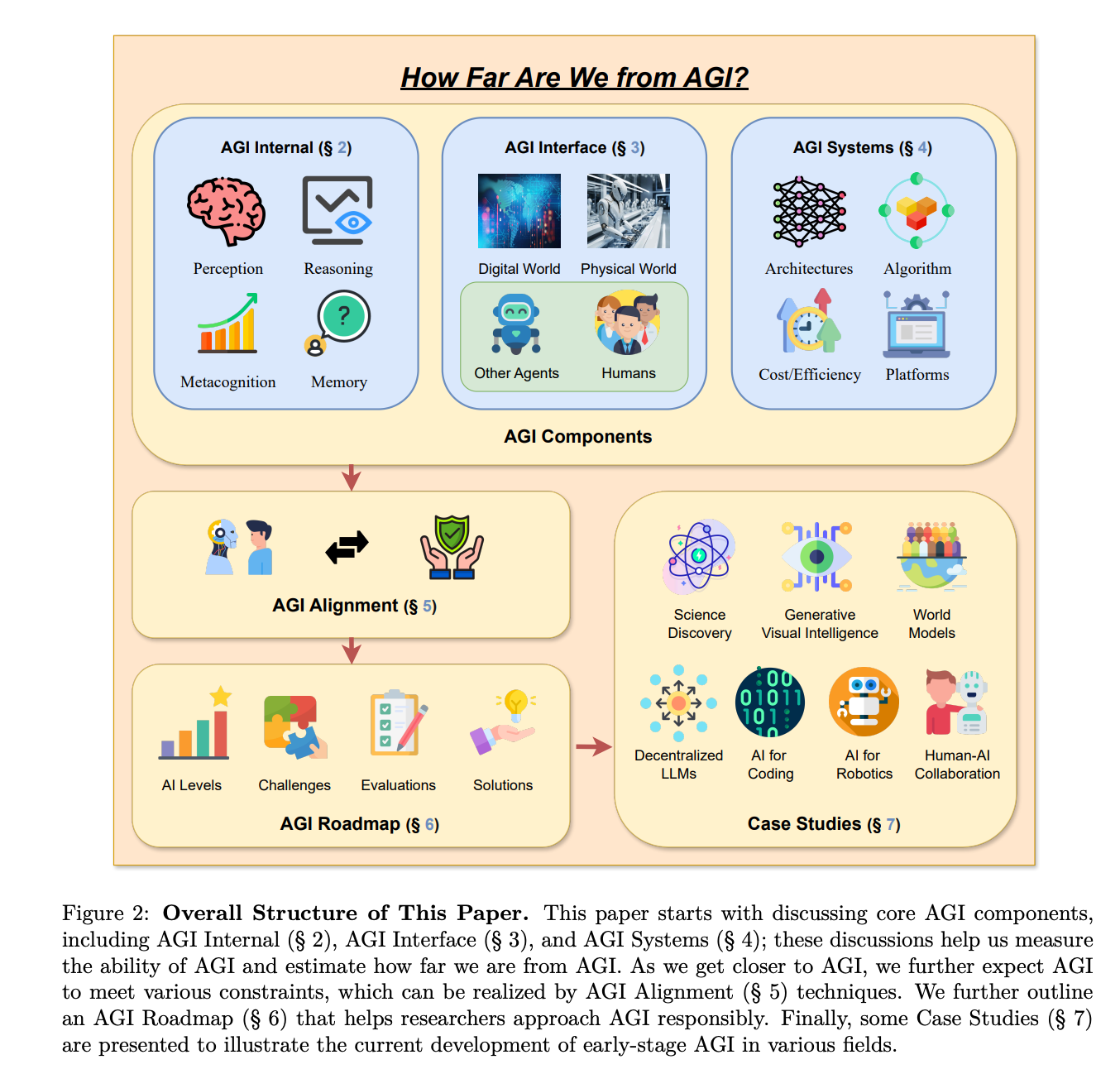

The paper is structured along the lines below:

“AGI-level Perception: Current models of perception are still limited by their limited modality and lack of robustness. To address these limitations, we propose several potential future research directions:

The diversification of modalities is essential for integrating multiple data types and improving model capabilities. […]

Encouraging multi-modal systems to be more robust and reliable. […]

Explainable multi-modal models point out the direction for future improvement. […]”

“Substantial research indicates that reasoning capabilities have emerged in large machine-learning models […] including arithmetic, commonsense, symbolic reasoning, and challenges in both simulated and real-world settings.”

The snippet above should be interpreted with some nuance. For example, having led an effort in machine common sense, I can safely say that, for all their impressive performance, LLMs still lack true common sense. That is not to say they haven’t become strikingly good at tests of common sense that previous models failed miserably at. Nevertheless, on deeper tests of common sense, LLMs make mistakes that they shouldn’t.

The authors do acknowledge some of the limitations of reasoning in LLMs:

“While language models fall short of consistent reasoning and planning in various scenarios, world and agent models can provide essential elements of human-like deliberative reasoning, including beliefs, goals, anticipation of consequences, and strategic planning. The LAW framework (Hu and Shu, 2023) suggests reasoning with world and agent models, with language models serving as the backend for implementing the system or its components.”

Skipping ahead about a hundred pages to the end of the paper, the following paragraph nicely summarizes the potential of AGI:

“The pursuit of AGI is an extraordinarily challenging endeavor, fraught with immense complexities and obstacles. However, with the recent advancements in various aspects of AI research, the vision of achieving AGI has never been more vivid and tangible. Prominent figures in the AI community, such as Jensen Huang, Sam Altman, and Elon Musk, have expressed their confidence in the eventual realization of AGI, further fueling the enthusiasm and determination of researchers and engineers worldwide […] By prioritizing ethical considerations, collaborative efforts, and a commitment to the betterment of humanity, we can work towards a future in which AGI systems serve as powerful tools for solving complex problems, driving scientific discovery, and improving the quality of life for all.”

Amen.