Musing 52: 3D Building Generation in Minecraft via Large Language Models

A recently accepted paper at the IEEE Conference on Games.

Today’s paper: 3D Building Generation in Minecraft via Large Language Models. Hu et al. 13 June 2024. https://arxiv.org/pdf/2406.08751

Procedural content generation (PCG) is the automated creation of game content such as environments and levels. As with so much else, LLMs have led to new applications in this field, and these models can play a vital role in meeting complex needs through human feedback. Examples include researchers using LLMs to create novel, playable levels for the game Sokoban, as well as MarioGPT, which lets users enter prompts such as "some pipes, little enemies" to generate levels for Super Mario Bros. Unlike earlier methods that used a tokenizer, MarioGPT works directly with user prompts. Other researchers established a two-phase process to refine GPT-3, training it on levels designed by both humans and LLMs. In 2023, a competition named ChatGPT4PCG was also organized to encourage the creation of Angry Birds levels with LLMs. Despite these advances, the focus has remained predominantly on 2D level generation, and 3D game content generation is still largely unexplored.

Today’s paper proposes a Text to Building in Minecraft (T2BM) model, which takes a step in this direction by using LLMs to generate 3D buildings, accounting for elements such as facades, indoor scenes, and functional blocks like doors and beds. T2BM accepts simple prompts from users as input and generates buildings encoded by an interlayer, which defines the transformation between text and digital content. With T2BM, players or designers can construct buildings quickly without placing blocks one by one, and the human-crafted prompt does not need to be detailed. Experiments with GPT-3.5 and GPT-4 demonstrate that T2BM can generate complete buildings that align with human instructions.

T2BM works as follows: it receives a simple user input and outputs a complete building in Minecraft. First, it forwards the user’s description to an LLM for refinement, together with context such as examples of detailed building descriptions. The refined prompt is then combined with a format example and background information to form the final instruction, from which an LLM produces a building encoded in the interlayer. The interlayer, designed in JSON, represents buildings in Minecraft and transforms text into content the game can recognize. Errors in the interlayer output are corrected by a repairer. Finally, T2BM decodes the generated building in Minecraft. T2BM thus consists of three core modules: input refining, the interlayer, and repairing. These are nicely captured in the figure below.

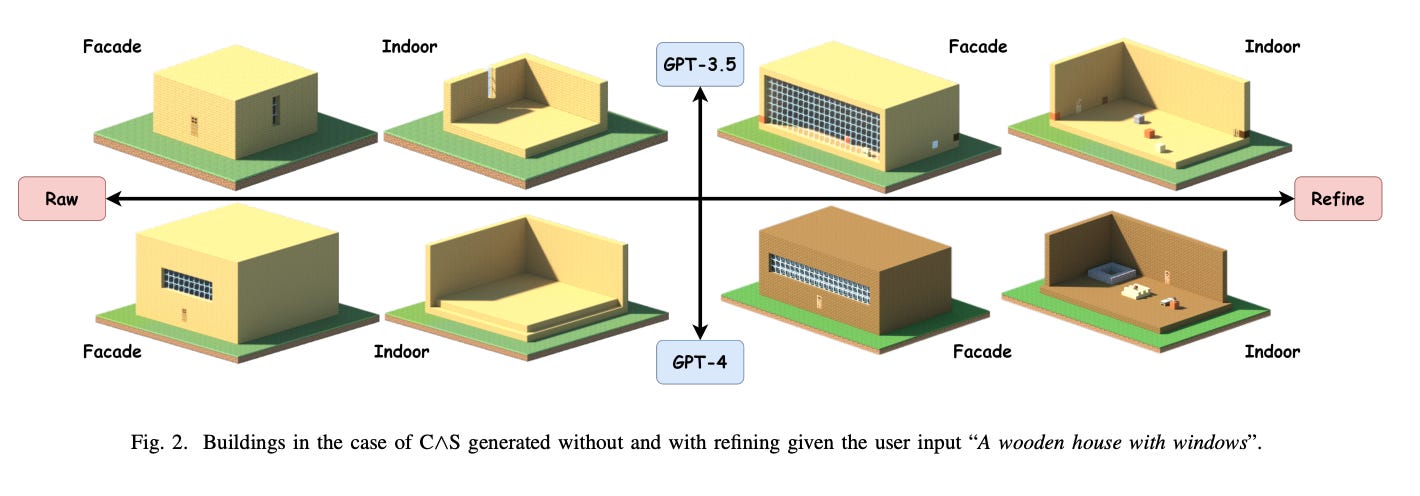
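The pipeline described above can be sketched in a few dozen lines of Python. This is a minimal, offline sketch under stated assumptions, not the authors' implementation: `fake_llm` is a hypothetical stand-in for a GPT-3.5/GPT-4 call, and the JSON schema (a flat `blocks` list of typed coordinates), the block whitelist `VALID_BLOCKS`, the alias table `ALIASES`, and the prompt strings are all illustrative inventions. Decoding to vanilla Minecraft `setblock` commands is likewise an assumption about how the final step could work.

```python
import json

# Hypothetical vocabulary: a tiny subset of valid Minecraft block ids.
VALID_BLOCKS = {"oak_planks", "glass_pane", "oak_door", "red_bed"}
# Hypothetical alias table the repairer uses to fix near-miss block names.
ALIASES = {"wooden_door": "oak_door", "wood_planks": "oak_planks", "bed": "red_bed"}

# Illustrative context strings; the paper's actual prompts are not reproduced here.
REFINE_EXAMPLES = "Example: 'A stone tower with three floors, spiral stairs and torches.'"
FORMAT_EXAMPLE = '{"blocks": [{"type": "oak_planks", "x": 0, "y": 0, "z": 0}]}'
BACKGROUND = "You design Minecraft buildings. Reply only with JSON."


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call so the sketch runs offline."""
    if prompt.startswith("REFINE:"):
        return "A 5x5 oak-plank house with glass windows, an oak door and a bed."
    # Canned interlayer output: stray text around the JSON and one invalid block id.
    return ('Here you go: {"blocks": ['
            '{"type": "oak_planks", "x": 0, "y": 0, "z": 0}, '
            '{"type": "glass_pane", "x": 1, "y": 1, "z": 0}, '
            '{"type": "wooden_door", "x": 2, "y": 0, "z": 0}]}')


def refine(user_input: str, llm) -> str:
    """Input refining: expand a short request using example descriptions."""
    return llm(f"REFINE: {REFINE_EXAMPLES}\nUser request: {user_input}")


def build_instruction(description: str) -> str:
    """Combine background, a format example and the building description."""
    return f"{BACKGROUND}\nFormat: {FORMAT_EXAMPLE}\nBuilding: {description}"


def repair(raw_text: str) -> dict:
    """Repairing: extract the JSON object, map aliases, drop unusable blocks."""
    start, end = raw_text.find("{"), raw_text.rfind("}")
    if start == -1 or end < start:
        return {"blocks": []}
    try:
        data = json.loads(raw_text[start:end + 1])
    except json.JSONDecodeError:
        return {"blocks": []}
    blocks = data.get("blocks") if isinstance(data, dict) else None
    if not isinstance(blocks, list):
        return {"blocks": []}
    fixed = []
    for block in blocks:
        if not isinstance(block, dict) or not isinstance(block.get("type"), str):
            continue
        name = ALIASES.get(block["type"], block["type"])
        if name not in VALID_BLOCKS:
            continue  # no valid mapping: drop the block
        try:
            x, y, z = int(block["x"]), int(block["y"]), int(block["z"])
        except (KeyError, TypeError, ValueError):
            continue  # missing or non-numeric coordinates
        fixed.append({"type": name, "x": x, "y": y, "z": z})
    return {"blocks": fixed}


def decode(building: dict, origin=(0, 64, 0)) -> list[str]:
    """Decode the interlayer into setblock commands relative to an origin."""
    ox, oy, oz = origin
    return [f"setblock {ox + b['x']} {oy + b['y']} {oz + b['z']} minecraft:{b['type']}"
            for b in building["blocks"]]


def t2bm(user_input: str, llm=fake_llm, use_refinement: bool = True) -> list[str]:
    """Pipeline: optional refine -> instruct -> generate -> repair -> decode."""
    description = refine(user_input, llm) if use_refinement else user_input
    raw = llm(build_instruction(description))
    return decode(repair(raw))


if __name__ == "__main__":
    for cmd in t2bm("A wooden house with windows"):
        print(cmd)
```

Calling `t2bm("A wooden house with windows")` returns three `setblock` commands, with the repairer mapping the out-of-vocabulary `wooden_door` to `oak_door`. Passing `use_refinement=False` corresponds to the raw setting, where the user's text goes straight into the final instruction.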

In the experiments, GPT-3.5 and GPT-4 are chosen for their strong capabilities. Two settings are compared to examine the contribution of refinement: (i) raw, a simple user input such as “A wooden house with windows”; and (ii) refined, a detailed description produced by the LLM from the user input. Each setting is evaluated 50 times.

Results of the completeness and satisfaction constraint checks are shown in the table below. “C” denotes the ratio of complete outputs among all outputs. “S” denotes the ratio of outputs that satisfy the material constraint given in the user’s input, among all outputs. Other logical combinations of C and S, such as C∧S, are also considered.
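Once each generated building has a verdict for both checks, the ratios in the table are simple frequencies. The sketch below assumes the completeness and material checks have already produced one boolean pair per run; the `Outcome` type and the choice to report all four joint cells are illustrative, since the exact combinations in the paper's table are not listed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Verdicts for one generated building (illustrative type)."""
    complete: bool   # passed the completeness check (C)
    satisfies: bool  # met the material constraint from the user input (S)


def rates(outcomes: list[Outcome]) -> dict[str, float]:
    """Return the fraction of outcomes falling into each category."""
    n = len(outcomes)
    if n == 0:
        raise ValueError("need at least one outcome")

    def frac(pred) -> float:
        # Count outcomes matching the predicate, then normalize by n.
        return sum(1 for o in outcomes if pred(o)) / n

    return {
        "C": frac(lambda o: o.complete),
        "S": frac(lambda o: o.satisfies),
        "C∧S": frac(lambda o: o.complete and o.satisfies),
        "C∧¬S": frac(lambda o: o.complete and not o.satisfies),
        "¬C∧S": frac(lambda o: not o.complete and o.satisfies),
        "¬C∧¬S": frac(lambda o: not o.complete and not o.satisfies),
    }
```

With 50 runs per setting, every ratio is a multiple of 1/50 = 0.02, and the four joint cells always sum to 1.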

The results show that refinement helps substantially when generating these buildings. Moreover, GPT-4 performs better than GPT-3.5. The figure below shows buildings that met both the completeness and satisfaction constraints (C∧S), generated by GPT-3.5 and GPT-4 with raw and refined prompts.