Musing 74: How will advanced AI systems impact democracy?

A unique multi-institutional paper out of the likes of OpenAI, Anthropic, Oxford, Harvard, and several others

Today’s paper: How will advanced AI systems impact democracy? https://arxiv.org/pdf/2409.06729

We’ve been on a hiatus for the last week, but I’m glad to be back with this very interesting paper. I’ve been expecting something like this to come out for a while now, and it’s good to see that it has multi-institutional heft, including joint authorship from ‘rivals’ like OpenAI and Anthropic.

By ‘advanced AI systems’ it’s fairly obvious that the authors are really referring to LLMs and generative models like ChatGPT, including the newer versions on the horizon that can handle complex multi-modal data. Publicly available LLMs already have user bases thought to collectively exceed 100 million monthly users. If citizens are turning to LLMs like ChatGPT, Gemini, or Claude for information on current events, political controversies, and electoral decisions, even minor biases in their outputs could meaningfully shift the distribution of political beliefs within the population. Several studies have sought to quantify the extent of political bias in LLMs, often by presenting them with multiple-choice survey questions and analyzing the relative likelihood of each candidate response (e.g., option A versus option B) based on the model’s outputs.
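To make the "relative likelihood of each candidate response" idea concrete, here is a minimal sketch of how such a comparison could be computed once you have a log-probability for each answer option. The function name `option_probabilities` and the log-probability values are made up for illustration, and this is not necessarily the exact procedure used in the studies the paper surveys.

```python
import math


def option_probabilities(option_logprobs):
    """Renormalize log-probabilities so they sum to 1 over the answer options.

    `option_logprobs` maps an option label (e.g. "A") to the log-probability
    the model assigned to that option. Hypothetical helper for illustration.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    max_lp = max(option_logprobs.values())
    weights = {k: math.exp(v - max_lp) for k, v in option_logprobs.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


# Invented log-probabilities for a two-option survey question.
example_logprobs = {"A": -0.4, "B": -1.6}
example_probs = option_probabilities(example_logprobs)
print(example_probs)
```

A model that leans consistently toward one side across many such questions would show up as a systematic skew in these renormalized probabilities.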

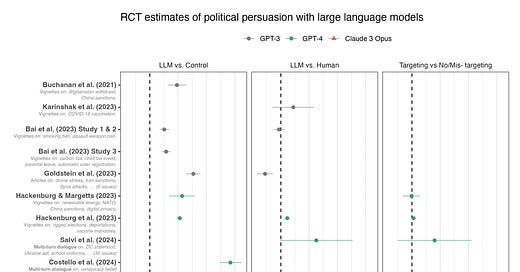

In the figure below, the authors present all known studies that randomized participants to receive LLM-generated political messages and assessed their attitudes afterward. For each study, they compute the simple difference in mean outcomes by condition, with 95% confidence intervals, to maintain consistency across the studies. However, they note that this approach may differ from the original analyses conducted by the papers’ authors. The studies vary in several aspects, such as the LLM used (GPT-3, GPT-4, Claude 3 Opus), the treatment format (vignettes, articles, chatbot conversations), the reference conditions (experts, laypeople, and so on), and the political topics explored.
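For readers who want to see what a "simple difference in means with a 95% confidence interval" looks like in practice, here is a minimal sketch. It assumes an unpooled standard error and a normal-approximation critical value of 1.96; the post does not spell out which variant the authors used, and the attitude scores below are invented.

```python
import math
from statistics import mean, variance


def diff_in_means_ci(treated, control, z=1.96):
    """Return (difference, lower bound, upper bound) for mean(treated) - mean(control).

    Uses the unpooled standard error and a normal-approximation critical value.
    Both choices are assumptions made for this sketch.
    """
    diff = mean(treated) - mean(control)
    # statistics.variance is the sample variance (divides by n - 1).
    se = math.sqrt(variance(treated) / len(treated) + variance(control) / len(control))
    return diff, diff - z * se, diff + z * se


# Invented attitude scores for a treatment group and a control group.
treated_scores = [4, 5, 6, 5]
control_scores = [3, 4, 3, 4]
estimate, lower, upper = diff_in_means_ci(treated_scores, control_scores)
print(estimate, lower, upper)
```

If the interval excludes zero, the message condition shifted attitudes by a detectable amount relative to the reference condition; if it straddles zero, the study cannot distinguish the effect from noise.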

None of the three studies that directly measured the effect of targeted messaging on participants’ attitudes showed a significant difference between the impact of targeted and untargeted LLM messages: “These findings align with existing work suggesting that micro-targeted messages are rarely more effective than the single most persuasive message across the entire population, and suggest that at present, the use of LLMs for tailored political messaging may be less transformative than has been feared.”

In a functioning democracy, individuals are free to express a range of opinions and engage in deliberation with mutual tolerance and respect. While some worry that AI could be exploited to stifle political discourse, there is also optimism that AI could foster healthier spaces for citizen engagement. Machine learning tools are already employed to moderate content by detecting offensive, profane, or explicit messages online. However, LLMs may take this further by intercepting uncivil comments and suggesting they be voluntarily withdrawn or rephrased—potentially more efficiently and accurately than human moderators. In one study, LLMs intervened in political discussions between U.S. voters with opposing views on gun control, offering less adversarial rewording. Participants accepted the revised phrasing about two-thirds of the time, and when they did, improvements in conversation quality and democratic reciprocity (the respect for others’ right to hold differing views) were observed.

The authors also highlight that LLM interventions could potentially amplify the voices of individuals who are at risk of being marginalized in discussions. For instance, one study found that introducing LLMs into mixed-gender groups of Afghan citizens engaging in debates on sensitive political topics led to an increase in the diversity of ideas contributed by female participants.

In 2024, as numerous nations prepare for elections, there has been growing concern that AI might be used to interfere with the democratic process. Fears are mounting that hostile entities, including foreign governments, could exploit AI-generated disinformation to manipulate political narratives. This might involve AI-driven “hack and leak” operations, where private accounts (such as emails) are breached to extract sensitive information, which can then be released to discredit political opponents or unfairly influence the outcome. For instance, Star Blizzard, a hacking group linked to Russian intelligence, is suspected of having systematically targeted Western politicians and journalists, in addition to launching a direct assault on the UK Electoral Commission. Research has also highlighted how tools like ChatGPT could be employed to craft highly personalized spear phishing attacks, including those aimed at members of the British Parliament. These are clearly real concerns.

Some other interesting takeaways:

“LLMs also offer new opportunities for improving interactions among citizens in social media or debate platforms, by summarising opinions and optimising the routing of comments between discussants, and helping humans themselves become more effective conversation partners.”

“Whilst there is scant evidence that electoral outcomes were materially affected in these cases, the arrival of hyper-realistic generative content could threaten to rob news media of its ‘epistemic backstop’ - the decisive authority that [was] previously provided by a video or audio recording of a news event.”

“Over the longer term, repeated exposure to significant volumes of realistic deepfake materials could have a systemic effect on the population’s epistemic health. Users are more likely to believe information that is repeated, independent of its plausibility, and AI systems offer new and more targeted ways to deluge users with misleading content.”

“Given the ease with which such deepfake materials can be generated – using a short snippet of genuine audio and a few dollars – efforts to use generative AI to sow confusion among voters and officials could grow.”

“More reliable fact-checking services may soon be available, but when they arrive, we need to find ways to ensure that they have impact. For example, one study found that participants were just as likely to believe and to share content that ChatGPT had flagged as false as that which it had supposedly verified, and people generally mistrust ChatGPT as a source of political information.”

“AI is the transformative technology of the 21st century, and so it naturally has an impact on the materiality of democracy – the infrastructure that supports the democratic process in society.”

“AI may even start to draft entire pieces of legislation (even if based on human desiderata) – in November 2023 the legislature of Porto Alegre, Brazil, passed the first law written entirely by an LLM. If AI can help politicians respond better to citizen’s needs, this could bolster their perceived legitimacy as democratic representatives.”

“AI will present specific challenges to democracy at multiple levels: epistemic, material and foundational. However, AI also holds out potential affirmative opportunities.”

What I liked most about the article is that it is not all negative; it does not simply sound the alarm about how AI will harm democracy. The authors contend that AI can play a positive role in democracy by creating new opportunities to educate and engage with citizens, enhancing public discourse, helping individuals find common ground, and offering fresh ways to reimagine and improve democratic processes.

Amen to that.