Musing 75: Explaining Datasets in Words

Interesting paper out of UC Berkeley on how datasets can be described automatically with natural language

Today’s paper: Explaining Datasets in Words: Statistical Models with Natural Language Parameters. 13 Sept. 2024. https://arxiv.org/pdf/2409.08466

Today’s paper addresses the challenge of interpreting high-dimensional parameters in statistical models used for analyzing massive datasets. Traditional methods like clustering text embeddings often result in parameters that are difficult to interpret. To solve this, the authors introduce a family of statistical models parameterized by natural language predicates, making the model parameters inherently more interpretable.

It’s helpful to consider an example to contextualize this further. Instead of a cluster being represented by a set of embeddings, it can be described using a predicate like "discusses COVID." This approach aims to provide clear and meaningful explanations of model parameters, leading to a better understanding of the data.

To make model parameters directly interpretable, the authors introduce a family of models (Figure 1 above) where some of their parameters are represented as natural language predicates, which are inherently interpretable. Their core insight is that they can use a predicate to extract a 0/1 feature by checking whether it is true on a sample. Using these 0/1 feature values, they define a wide variety of models, including clustering, classification, and time series modeling, all parameterized by natural language predicates.

What is new about this approach? The authors’ algorithm heavily relies on the ability of LLMs to explain distributional patterns in data when prompted with datasets. Some prior work has prompted LLMs to explain differences between two text distributions. Others have explored prompting LLMs to generate topic descriptions over unstructured texts. Yet others have explored prompting LLMs to explain the function that maps from an input to an output, including by prompting them to explain what inputs activate a direction in the neural embedding space. However, these works focused on individual applications or models in isolation; in contrast, this work creates a unifying framework to define and learn more complex models (e.g. time series) with natural language parameters.

There’s a fair amount of math, and a couple more illustrative figures, in the rest of the paper explaining the framework more fully. It’s readable, although you do have to be conversant in this general line of work. I’ll intuitively try to describe the method as follows:

Imagine you have a large set of text data, and you use a statistical model to cluster this data. Normally, each cluster is represented by complex, high-dimensional parameters like numerical embeddings. These are hard to understand directly and don't tell you much about the nature of each cluster. Instead of relying on these hard-to-interpret parameters, the framework introduces a way to use simple, human-readable language to describe them. For example, rather than saying "this cluster has a certain numerical value," you could say "this cluster talks about COVID."

The authors’ framework creates a variety of models (like clustering, time series, and classification) where some of the parameters are described using natural language predicates. These predicates are essentially sentences or phrases that you can use to extract information from the data. For instance, a predicate might be "is sports-related," which helps identify whether a piece of text discusses sports. To determine the best natural language descriptions for different parameters, the framework uses a process that involves:

Continuous Relaxation: Starting with a rough idea of what each parameter might mean, the model uses a continuous approximation to optimize these descriptions.

Discretization: Once it finds a promising description, it refines it by prompting a language model (like GPT) to generate clearer, more specific phrases.

Iteration: It goes through multiple rounds of refining and improving these descriptions to make them as accurate and meaningful as possible.

Ultimately, the goal is to organize user dialogues into meaningful clusters, analyze how discussions evolve over time, and even explain visual features in images. The goal is to produce results that are not just accurate but also easily understandable and useful for humans.

Next, let’s get into results. The first experimental question that the authors explore is whether their method performs better than naïvely prompting language model to generate predicates. As the data in Table 2 below shows, across all entries, their approach significantly outperforms this baseline.

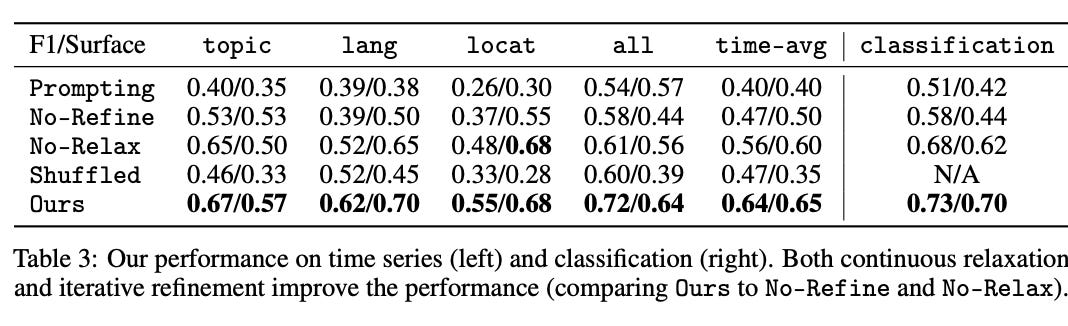

The authors also find that iterative refinement improves the performance (the No-Refine entry in the table above and below) i.e., a variant of their algorithm that only discretizes the initial continuous representations and does not iteratively refine the predicates.

Finally, the authors’ method accounts for information beyond the set of text samples (e.g. temporal correlations in the time series). They investigate this claim using the time series datasets, where they shuffle the text order and hence destroy the time-dependent information a model could use to extract informative predicates (Shuffled). Table 3 above finds that Ours is better than Shuffled in all cases, indicating that the method does make use of temporal correlations.

For me, the most interesting part of the paper is after the results, where the authors spend some time exploring ‘open-ended applications’. Two of these are found below, nicely explained visually.

In closing, this paper formalizes a broad family of models parameterized by natural language predicates. The authors design a learning algorithm based on continuous relaxation and iterative refinement, both of them effective based on ablation studies. Finally, they apply the framework to a wide range of applications, showing that it is highly versatile, practically useful, applicable to both text and vision domains, explaining sophisticated concepts that classical methods struggle to produce.